Note

This page is reference documentation. It only explains the class signature, not how to use it. Please refer to the user guide for the big picture.

nilearn.maskers.NiftiSpheresMasker

- class nilearn.maskers.NiftiSpheresMasker(seeds=None, radius=None, mask_img=None, allow_overlap=False, smoothing_fwhm=None, standardize=False, standardize_confounds=True, high_variance_confounds=False, detrend=False, low_pass=None, high_pass=None, t_r=None, dtype=None, memory=None, memory_level=1, verbose=0, reports=True, clean_args=None)

Class for masking of Niimg-like objects using seeds.

NiftiSpheresMasker is useful when data from given seeds should be extracted.

Use case: summarize brain signals from seeds that were obtained from prior knowledge.

- Parameters:

- seeds

list of triplet of coordinates in native space or None, default=None Seed definitions. List of coordinates of the seeds in the same space as the images (typically MNI or TAL).

- radius

float, default=None Indicates, in millimeters, the radius for the sphere around the seed. By default signal is extracted on a single voxel.

- mask_img : Niimg-like object, default=None

See Input and output: neuroimaging data representation. Mask to apply to regions before extracting signals.

- allow_overlap

bool, default=False If False, an error is raised if the maps overlap (i.e., at least two maps have a non-zero value for the same voxel).

- smoothing_fwhm

float or int or None, optional. If smoothing_fwhm is not None, it gives the full-width at half maximum in millimeters of the spatial smoothing to apply to the signal.

- standardize : any of: ‘zscore_sample’, ‘zscore’, ‘psc’, True, False or None; default=False

Strategy to standardize the signal:

'zscore_sample': The signal is z-scored. Timeseries are shifted to zero mean and scaled to unit variance. Uses sample std.

'psc': Timeseries are shifted to zero mean value and scaled to percent signal change (as compared to original mean signal).

True: The signal is z-scored (same as option zscore). Timeseries are shifted to zero mean and scaled to unit variance. Deprecated since Nilearn 0.13.0: In nilearn version 0.15.0, True will be replaced by 'zscore_sample'.

False: Do not standardize the data. Deprecated since Nilearn 0.13.0: In nilearn version 0.15.0, False will be replaced by None.

Deprecated since Nilearn 0.13.0: The default will be changed to None in version 0.15.0.

- standardize_confounds

bool, default=True If set to True, the confounds are z-scored: their mean is put to 0 and their variance to 1 in the time dimension.

- high_variance_confounds

bool, default=False If True, high variance confounds are computed on provided image with nilearn.image.high_variance_confounds and default parameters and regressed out.

- detrend

bool, optional Whether to detrend signals or not.

- low_pass

float or int or None, default=None Low cutoff frequency in Hertz. If specified, signals above this frequency will be filtered out. If None, no low-pass filtering will be performed.

- high_pass

float or int or None, default=None High cutoff frequency in Hertz. If specified, signals below this frequency will be filtered out.

- t_r

float or int or None, default=None Repetition time, in seconds (sampling period). Set to None if not provided.

- dtype : dtype like, “auto” or None, default=None

Data type toward which the data should be converted. If “auto”, the data will be converted to int32 if dtype is discrete and float32 if it is continuous. If None, data will not be converted to a new data type.

- memory : None, instance of joblib.Memory, str, or pathlib.Path, default=None

Used to cache the masking process. By default, no caching is done. If a str is given, it is the path to the caching directory.

- memory_level

int, default=1 Rough estimator of the amount of memory used by caching. Higher value means more memory for caching. Zero means no caching.

- verbose

bool or int, default=0 Verbosity level (0 or False means no message).

- reports

bool, default=True If set to True, data is saved in order to produce a report.

- clean_args

dict or None, default=None Keyword arguments to be passed to clean called within the masker. Within clean, kwargs prefixed with 'butterworth__' will be passed to the Butterworth filter.

Added in Nilearn 0.12.0.
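To make the seeds, radius and allow_overlap parameters more concrete, here is a plain NumPy sketch of how a radius in millimeters selects voxels around a seed, and how two spheres can come to share a voxel. The helper `sphere_mask`, the 2 mm affine, and the seed coordinates are hypothetical; this illustrates the geometry only and is not nilearn's implementation.

```python
import numpy as np


def sphere_mask(shape, affine, seed_mm, radius_mm):
    """Boolean mask of voxels whose centers lie within radius_mm of seed_mm."""
    # Voxel indices flattened to shape (3, n_voxels).
    ijk = np.indices(shape).reshape(3, -1)
    # Map voxel indices to world (mm) coordinates with the affine.
    xyz = affine[:3, :3] @ ijk + affine[:3, 3:4]
    seed = np.asarray(seed_mm, dtype=float)[:, None]
    dist = np.linalg.norm(xyz - seed, axis=0)
    return (dist <= radius_mm).reshape(shape)


shape = (10, 10, 10)
affine = np.diag([2.0, 2.0, 2.0, 1.0])  # hypothetical 2 mm isotropic voxels

a = sphere_mask(shape, affine, seed_mm=(8, 8, 8), radius_mm=2.0)
b = sphere_mask(shape, affine, seed_mm=(10, 8, 8), radius_mm=2.0)

print(a.sum())        # number of voxels inside the first sphere
print((a & b).any())  # True means the two spheres share at least one voxel
```

Two seeds placed this close together produce overlapping spheres, which is the situation allow_overlap=False is described as rejecting.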


- Attributes:

- clean_args_

dict Keyword arguments to be passed to clean called within the masker. Within clean, kwargs prefixed with 'butterworth__' will be passed to the Butterworth filter.

- mask_img_ : A 3D binary nibabel.nifti1.Nifti1Image or None.

The mask of the data. If no mask_img was passed at masker construction, then mask_img_ is None; otherwise it is the binarized version of mask_img, where each voxel is True if all values across samples (for example across timepoints) are finite and different from 0.

- memory_ : joblib memory cache

- n_elements_

int The number of seeds in the masker.

Added in Nilearn 0.9.2.

- seeds_

list of list The coordinates of the seeds in the masker.


See also

- __init__(seeds=None, radius=None, mask_img=None, allow_overlap=False, smoothing_fwhm=None, standardize=False, standardize_confounds=True, high_variance_confounds=False, detrend=False, low_pass=None, high_pass=None, t_r=None, dtype=None, memory=None, memory_level=1, verbose=0, reports=True, clean_args=None)

- fit(imgs=None, y=None)

Compute the mask corresponding to the data.

- Parameters:

- imgs

list of Niimg-like objects or None, default=None See Input and output: neuroimaging data representation. Data on which the mask must be calculated. If this is a list, the affine is considered the same for all.

- y : None

This parameter is unused. It is solely included for scikit-learn compatibility.


- fit_transform(imgs, y=None, confounds=None, sample_mask=None)

Prepare and perform signal extraction.

- Parameters:

- imgs : 3D/4D Niimg-like object

See Input and output: neuroimaging data representation. Images to process.

- y : None

This parameter is unused. It is solely included for scikit-learn compatibility.

- confounds

numpy.ndarray, str, pathlib.Path, pandas.DataFrame or list of confounds timeseries, default=None This parameter is passed to nilearn.signal.clean. Please see the related documentation for details. shape: (number of scans, number of confounds)

- sample_mask : Any type compatible with numpy-array indexing, default=None

shape = (total number of scans - number of scans removed) for explicit index (for example, sample_mask=np.asarray([1, 2, 4])), or shape = (number of scans) for binary mask (for example, sample_mask=np.asarray([False, True, True, False, True])). Masks the images along the last dimension to perform scrubbing: for example to remove volumes with high motion and/or non-steady-state volumes. This parameter is passed to nilearn.signal.clean.

Added in Nilearn 0.8.0.

- Returns:

- signals

numpy.ndarray, pandas.DataFrame or polars.DataFrame Signal for each element.

Changed in Nilearn 0.13.0: Added set_output support.

The type of the output is determined by set_output(): see the scikit-learn documentation.

Output shape for:

For Numpy outputs:

3D images: (number of elements,)

4D images: (number of scans, number of elements) array

For DataFrame outputs:

3D or 4D images: (number of scans, number of elements) array
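The two sample_mask forms described in the parameters above (explicit indices and a binary mask) can be compared with plain NumPy indexing. This sketch works on a toy array standing in for extracted signals and does not call nilearn; the array values are illustrative only.

```python
import numpy as np

n_scans = 5
# Toy signals: one row per scan, two columns (elements).
signals = np.arange(n_scans * 2, dtype=float).reshape(n_scans, 2)

# Explicit indices of the scans to keep ...
index_mask = np.asarray([1, 2, 4])
# ... and the equivalent binary mask, with one entry per scan.
binary_mask = np.asarray([False, True, True, False, True])

kept_by_index = signals[index_mask]
kept_by_bool = signals[binary_mask]
print(kept_by_index.shape)
```

Both forms keep scans 1, 2 and 4, so the index form has one entry per retained scan while the binary form has one entry per original scan.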


- generate_report(displayed_spheres='all', title=None)

Generate an HTML report for the current NiftiSpheresMasker object.

Note

This functionality requires Matplotlib to be installed.

- Parameters:

- displayed_spheres

int, ndarray or list of int, or “all”, default="all" Indicates which spheres will be displayed in the HTML report.

- If "all": All spheres will be displayed in the report.

masker.generate_report("all")

- If a list or ndarray of int: Only the spheres with the given indices will be displayed. Example to display spheres 6, 3 and 12:

masker.generate_report([6, 3, 12])

- If an int: This will only display the first n spheres, n being the value of the parameter. Example to display the first 16 spheres:

masker.generate_report(16)

- title

str or None, default=None Title for the report. If None, title will be the class name.


- Returns:

- report : nilearn.reporting.html_report.HTMLReport

HTML report for the masker.

- get_feature_names_out(input_features=None)

Get output feature names for transformation.

The feature names out will be prefixed by the lowercased class name. For example, if the transformer outputs 3 features, then the feature names out are: [“class_name0”, “class_name1”, “class_name2”].

- Parameters:

- input_features : array-like of str or None, default=None

Only used to validate feature names with the names seen in fit.

- Returns:

- feature_names_out : ndarray of str objects

Transformed feature names.
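The naming rule stated above can be illustrated without calling the method. This sketch applies the rule by hand, assuming a hypothetical masker with three output features; it shows the expected pattern of the names, not the method's internals.

```python
class_name = "NiftiSpheresMasker"
n_features = 3  # hypothetical number of output features (one per seed)

# Lowercased class name followed by the feature index.
prefix = class_name.lower()
feature_names = [f"{prefix}{i}" for i in range(n_features)]
print(feature_names)
```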

- get_metadata_routing()

Get metadata routing of this object.

Please check User Guide on how the routing mechanism works.

- Returns:

- routing : MetadataRequest

A MetadataRequest encapsulating routing information.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters:

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- params : dict

Parameter names mapped to their values.

- inverse_transform(region_signals)

Compute voxel signals from spheres signals.

Any mask given at initialization is taken into account. Raises an error if mask_img is None.

- Parameters:

- region_signals

1D/2D numpy.ndarray Extracted signal. If a 1D array is provided, then the shape should be (number of elements,). If a 2D array is provided, then the shape should be (number of scans, number of elements).

- Returns:

- img

nibabel.nifti1.Nifti1Image Transformed image in brain space. Output shape for:

1D array: 3D nibabel.nifti1.Nifti1Image will be returned.

2D array: 4D nibabel.nifti1.Nifti1Image will be returned.
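The 1D-to-3D and 2D-to-4D correspondence can be sketched with NumPy alone. The `membership` matrix and the helper `spheres_to_volume` below are hypothetical stand-ins for the sphere definitions; the sketch shows the shape logic only and is not how nilearn builds the output image.

```python
import numpy as np

shape = (4, 4, 4)
n_elements = 2
# Hypothetical sphere membership: one boolean row per sphere, one column per voxel.
membership = np.zeros((n_elements, int(np.prod(shape))), dtype=bool)
membership[0, :3] = True
membership[1, 10:12] = True


def spheres_to_volume(signals):
    """Paint each sphere's value onto its voxels (shape logic only)."""
    signals = np.asarray(signals, dtype=float)
    flat = signals @ membership.astype(float)  # (..., n_voxels)
    if signals.ndim == 1:
        return flat.reshape(shape)  # 3D volume
    # Put the scan axis last, as in a 4D image.
    return flat.T.reshape(shape + (signals.shape[0],))


vol3d = spheres_to_volume([1.0, 2.0])
vol4d = spheres_to_volume(np.ones((5, n_elements)))
print(vol3d.shape, vol4d.shape)
```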


- set_fit_request(*, imgs='$UNCHANGED$')

Configure whether metadata should be requested to be passed to the fit method.

Note that this method is only relevant when this estimator is used as a sub-estimator within a meta-estimator and metadata routing is enabled with enable_metadata_routing=True (see sklearn.set_config). Please check the User Guide on how the routing mechanism works.

The options for each parameter are:

True: metadata is requested, and passed to fit if provided. The request is ignored if metadata is not provided.

False: metadata is not requested and the meta-estimator will not pass it to fit.

None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.

str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

Added in version 1.3.

- Parameters:

- imgs : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for imgs parameter in fit.

- Returns:

- self : object

The updated object.

- set_inverse_transform_request(*, region_signals='$UNCHANGED$')

Configure whether metadata should be requested to be passed to the inverse_transform method.

Note that this method is only relevant when this estimator is used as a sub-estimator within a meta-estimator and metadata routing is enabled with enable_metadata_routing=True (see sklearn.set_config). Please check the User Guide on how the routing mechanism works.

The options for each parameter are:

True: metadata is requested, and passed to inverse_transform if provided. The request is ignored if metadata is not provided.

False: metadata is not requested and the meta-estimator will not pass it to inverse_transform.

None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.

str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

Added in version 1.3.

- Parameters:

- region_signals : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for region_signals parameter in inverse_transform.

- Returns:

- self : object

The updated object.

- set_output(*, transform=None)

Set output container.

See Introducing the set_output API for an example on how to use the API.

- Parameters:

- transform : {“default”, “pandas”, “polars”}, default=None

Configure output of transform and fit_transform.

“default”: Default output format of a transformer

“pandas”: DataFrame output

“polars”: Polars output

None: Transform configuration is unchanged

Added in version 1.4: “polars” option was added.

- Returns:

- self : estimator instance

Estimator instance.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters:

- **params : dict

Estimator parameters.

- Returns:

- self : estimator instance

Estimator instance.

- set_transform_request(*, confounds='$UNCHANGED$', imgs='$UNCHANGED$', sample_mask='$UNCHANGED$')

Configure whether metadata should be requested to be passed to the transform method.

Note that this method is only relevant when this estimator is used as a sub-estimator within a meta-estimator and metadata routing is enabled with enable_metadata_routing=True (see sklearn.set_config). Please check the User Guide on how the routing mechanism works.

The options for each parameter are:

True: metadata is requested, and passed to transform if provided. The request is ignored if metadata is not provided.

False: metadata is not requested and the meta-estimator will not pass it to transform.

None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.

str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

Added in version 1.3.

- Parameters:

- confounds : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for confounds parameter in transform.

- imgs : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for imgs parameter in transform.

- sample_mask : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for sample_mask parameter in transform.

- Returns:

- self : object

The updated object.

- transform(imgs, confounds=None, sample_mask=None)

Apply mask, spatial and temporal preprocessing.

- Parameters:

- imgs : 3D/4D Niimg-like object

See Input and output: neuroimaging data representation. Images to process. If a 3D niimg is provided, a 1D array is returned.

- confounds

numpy.ndarray, str, pathlib.Path, pandas.DataFrame or list of confounds timeseries, default=None This parameter is passed to nilearn.signal.clean. Please see the related documentation for details. shape: (number of scans, number of confounds)

- sample_mask : Any type compatible with numpy-array indexing, default=None

shape = (total number of scans - number of scans removed) for explicit index (for example, sample_mask=np.asarray([1, 2, 4])), or shape = (number of scans) for binary mask (for example, sample_mask=np.asarray([False, True, True, False, True])). Masks the images along the last dimension to perform scrubbing: for example to remove volumes with high motion and/or non-steady-state volumes. This parameter is passed to nilearn.signal.clean.

Added in Nilearn 0.8.0.

- Returns:

- signals

numpy.ndarray, pandas.DataFrame or polars.DataFrame Signal for each element.

Changed in Nilearn 0.13.0: Added set_output support.

The type of the output is determined by set_output(): see the scikit-learn documentation.

Output shape for:

For Numpy outputs:

3D images: (number of elements,)

4D images: (number of scans, number of elements) array

For DataFrame outputs:

3D or 4D images: (number of scans, number of elements) array
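Among the temporal preprocessing steps, the 'zscore_sample' and 'psc' strategies of the standardize parameter can be sketched on a toy array with NumPy. This follows the descriptions given for that parameter (sample standard deviation, percent change relative to the original mean) and is an assumption-laden illustration, not nilearn's code; the toy signals are synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy signals: 50 scans, 3 elements, with a non-zero mean (illustrative only).
signals = 100.0 + rng.normal(size=(50, 3))

mean = signals.mean(axis=0)

# 'zscore_sample': zero mean, unit variance using the sample std (ddof=1).
zscore_sample = (signals - mean) / signals.std(axis=0, ddof=1)

# 'psc': zero mean, expressed as percent change relative to the original mean.
psc = (signals - mean) / np.abs(mean) * 100.0

print(zscore_sample.std(axis=0, ddof=1))
print(psc.mean(axis=0))
```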


- transform_single_imgs(imgs, confounds=None, sample_mask=None)

Extract signals from a single 4D niimg.

- Parameters:

- imgs : 3D/4D Niimg-like object

See Input and output: neuroimaging data representation. Images to process.

- confounds

numpy.ndarray, str, pathlib.Path, pandas.DataFrame or list of confounds timeseries, default=None This parameter is passed to nilearn.signal.clean. Please see the related documentation for details. shape: (number of scans, number of confounds)

- sample_mask : Any type compatible with numpy-array indexing, default=None

shape = (total number of scans - number of scans removed) for explicit index (for example, sample_mask=np.asarray([1, 2, 4])), or shape = (number of scans) for binary mask (for example, sample_mask=np.asarray([False, True, True, False, True])). Masks the images along the last dimension to perform scrubbing: for example to remove volumes with high motion and/or non-steady-state volumes. This parameter is passed to nilearn.signal.clean.

Added in Nilearn 0.8.0.

- Returns:

- signals

numpy.ndarray, pandas.DataFrame or polars.DataFrame Signal for each element.

Changed in Nilearn 0.13.0: Added set_output support.

The type of the output is determined by set_output(): see the scikit-learn documentation.

Output shape for:

For Numpy outputs:

3D images: (number of elements,)

4D images: (number of scans, number of elements) array

For DataFrame outputs:

3D or 4D images: (number of scans, number of elements) array

- signals

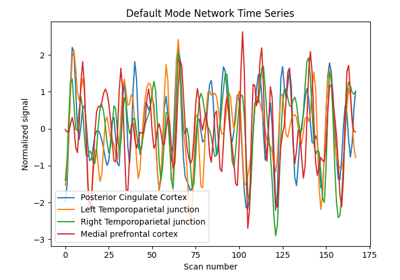

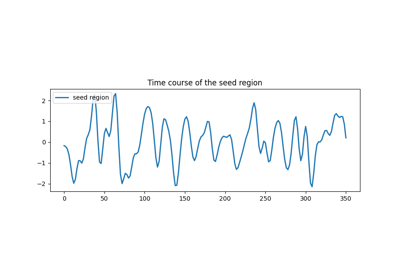

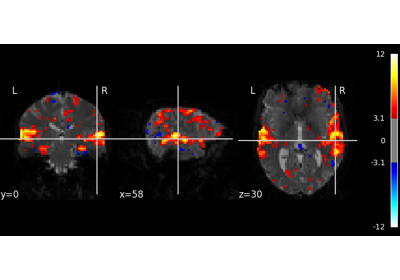

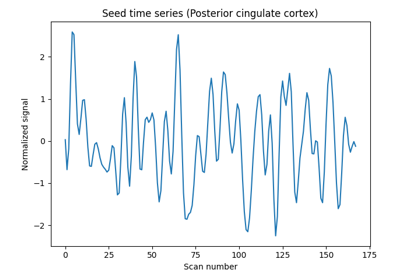

Examples using nilearn.maskers.NiftiSpheresMasker

Producing single subject maps of seed-to-voxel correlation

Beta-Series Modeling for Task-Based Functional Connectivity and Decoding