Note

This page is reference documentation. It explains only the function signature, not how to use it. Please refer to the user guide for the big picture.

nilearn.image.concat_imgs

- nilearn.image.concat_imgs(niimgs, dtype=<class 'numpy.float32'>, ensure_ndim=None, memory=None, memory_level=0, auto_resample=False, verbose=0)

Concatenate a list of images of varying lengths.

The image list can contain:

- Niimg-like objects of varying dimensions (i.e., 3D or 4D) as well as different 3D shapes and affines, as they will be matched to the first image in the list if auto_resample=True.

- surface images of varying dimensions (i.e., 1D or 2D) but with the same number of vertices.

- Parameters:

  - niimgs : iterable of Niimg-like objects, or glob pattern, or list or tuple of SurfaceImage object

    See Input and output: neuroimaging data representation. Images to concatenate.

  - dtype : numpy dtype, default=np.float32

    The dtype of the returned image.

  - ensure_ndim : int, default=None

    Indicate the dimensionality of the expected niimg. An error is raised if the niimg is of another dimensionality. Ignored for SurfaceImage.

  - auto_resample : bool, default=False

    Converts all images to the space of the first one. Ignored for SurfaceImage.

  - verbose : bool or int, default=0

    Verbosity level (0 or False means no message).

  - memory : None, instance of joblib.Memory, str, or pathlib.Path, default=None

    Used to cache the masking process. By default, no caching is done. If a str is given, it is the path to the caching directory. Ignored for SurfaceImage.

  - memory_level : int, default=0

    Rough estimator of the amount of memory used by caching. Higher value means more memory for caching. Zero means no caching. Ignored for SurfaceImage.


- Returns:

  - concatenated : Nifti1Image or SurfaceImage

    A single image.



Examples using nilearn.image.concat_imgs

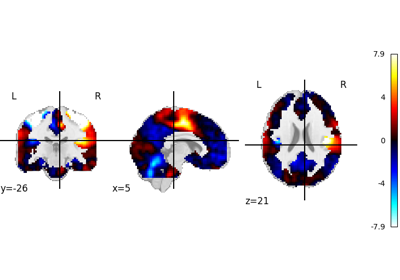

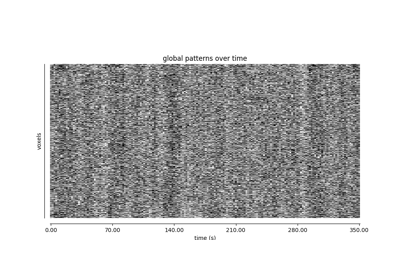

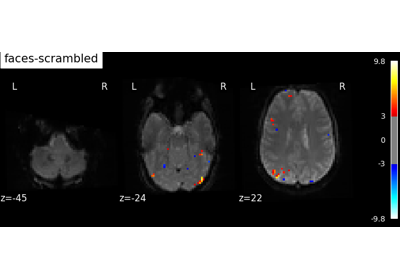

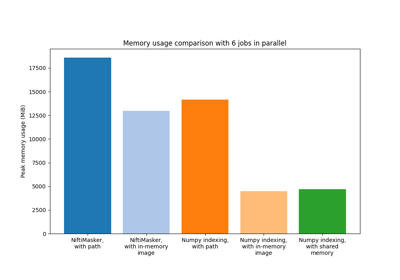

Beta-Series Modeling for Task-Based Functional Connectivity and Decoding