Second-level fMRI model: two-sample test, unpaired and paired

Full step-by-step example of fitting a GLM to perform a second-level analysis on experimental data and visualizing the results.

More specifically:

1. A sample of n=16 visual-activity fMRI contrast maps is downloaded.

2. An unpaired, two-sample t-test is applied to the brain maps in order to see the effect of the contrast difference across subjects.

3. A paired, two-sample t-test is applied to the brain maps in order to see the effect of the contrast difference across subjects, this time modeling a separate intercept for each subject.

The contrast is between responses to retinotopically distinct vertical versus horizontal checkerboards. At the individual level, these stimuli are sometimes used to map the borders of primary visual areas. At the group level, such a mapping is not possible. Yet, we may observe some significant effects in these areas.

import pandas as pd

from nilearn.datasets import fetch_localizer_contrasts

from nilearn.plotting import plot_design_matrix, plot_glass_brain, show

Fetch dataset

We download two lists of contrast maps from a localizer dataset: one for the vertical checkerboard and one for the horizontal checkerboard.

n_subjects = 16

sample_vertical = fetch_localizer_contrasts(

["vertical checkerboard"],

n_subjects,

)

sample_horizontal = fetch_localizer_contrasts(

["horizontal checkerboard"],

n_subjects,

)

# Implicitly, there is a one-to-one correspondence between the two samples:

# the first image of both samples comes from subject S1,

# the second from subject S2 etc.

Estimate second level models

We define the input maps and the design matrix for the second level model and fit it.

second_level_input = sample_vertical["cmaps"] + sample_horizontal["cmaps"]

Next, we model the effect of conditions (sample 1 vs sample 2).

import numpy as np

condition_effect = np.hstack(([1] * n_subjects, [0] * n_subjects))

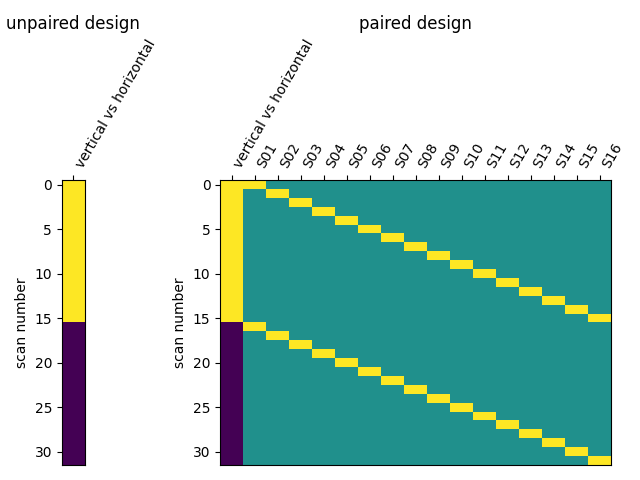

The design matrix for the unpaired test needs an intercept. For the paired test, we instead include an intercept for each subject.

subject_effect = np.vstack((np.eye(n_subjects), np.eye(n_subjects)))

subjects = [f"S{i:02d}" for i in range(1, n_subjects + 1)]

We then assemble those into design matrices

unpaired_design_matrix = pd.DataFrame(

{

"vertical vs horizontal": condition_effect,

"intercept": 1,

}

)

paired_design_matrix = pd.DataFrame(

np.hstack((condition_effect[:, np.newaxis], subject_effect)),

columns=["vertical vs horizontal", *subjects],

)

and plot the designs.

import matplotlib.pyplot as plt

_, (ax_unpaired, ax_paired) = plt.subplots(

1,

2,

gridspec_kw={"width_ratios": [1, 17]},

constrained_layout=True,

)

plot_design_matrix(unpaired_design_matrix, rescale=False, axes=ax_unpaired)

plot_design_matrix(paired_design_matrix, rescale=False, axes=ax_paired)

ax_unpaired.set_title("unpaired design", fontsize=12)

ax_paired.set_title("paired design", fontsize=12)

show()
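Before fitting, it can be useful to check that both designs are well-posed. The following is a minimal sketch using NumPy only; the variable names `unpaired`, `paired`, and `paired_plus_intercept` are introduced here for illustration and are not part of the example. It rebuilds the two designs as plain arrays and inspects their rank.

```python
import numpy as np

n_subjects = 16

# Same building blocks as in the example.
condition_effect = np.hstack(([1] * n_subjects, [0] * n_subjects))
subject_effect = np.vstack((np.eye(n_subjects), np.eye(n_subjects)))

# Unpaired design: condition column plus a single global intercept.
unpaired = np.column_stack((condition_effect, np.ones(2 * n_subjects)))

# Paired design: condition column plus one indicator column per subject.
paired = np.column_stack((condition_effect, subject_effect))

rank_unpaired = np.linalg.matrix_rank(unpaired)
rank_paired = np.linalg.matrix_rank(paired)
print(unpaired.shape, rank_unpaired)
print(paired.shape, rank_paired)

# Hypothetical variant: adding a global intercept to the paired design.
# The subject columns already sum to a column of ones, so the extra
# column adds no new information and the design becomes rank deficient.
paired_plus_intercept = np.column_stack((paired, np.ones(2 * n_subjects)))
rank_plus_intercept = np.linalg.matrix_rank(paired_plus_intercept)
print(paired_plus_intercept.shape, rank_plus_intercept)
```

Both designs have full column rank, whereas the hypothetical variant has 18 columns but rank 17. This is why the paired design carries no separate "intercept" column.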

We specify the analysis models and fit them.

from nilearn.glm.second_level import SecondLevelModel

second_level_model_unpaired = SecondLevelModel(n_jobs=2, verbose=1).fit(

second_level_input, design_matrix=unpaired_design_matrix

)

second_level_model_paired = SecondLevelModel(n_jobs=2, verbose=1).fit(

second_level_input, design_matrix=paired_design_matrix

)

[SecondLevelModel.fit] Fitting second level model. Take a deep breath.

[SecondLevelModel.fit] Loading data from <nibabel.nifti1.Nifti1Image object at

0x7fae022341f0>

[SecondLevelModel.fit] Computing mask

[SecondLevelModel.fit] Resampling mask

[SecondLevelModel.fit] Finished fit

[SecondLevelModel.fit]

Computation of second level model done in 0.35 seconds.

[SecondLevelModel.fit] Fitting second level model. Take a deep breath.

[SecondLevelModel.fit] Loading data from <nibabel.nifti1.Nifti1Image object at

0x7fae098127a0>

[SecondLevelModel.fit] Computing mask

[SecondLevelModel.fit] Resampling mask

[SecondLevelModel.fit] Finished fit

[SecondLevelModel.fit]

Computation of second level model done in 0.34 seconds.

Estimating the contrast is simple. To do so, we provide the column name of the design matrix. The argument ‘output_type’ is set to return all available outputs so that we can compare differences in the effect size, variance, and z-score.

stat_maps_unpaired = second_level_model_unpaired.compute_contrast(

"vertical vs horizontal", output_type="all"

)

stat_maps_paired = second_level_model_paired.compute_contrast(

"vertical vs horizontal", output_type="all"

)

[SecondLevelModel.compute_contrast] Loading data from

<nibabel.nifti1.Nifti1Image object at 0x7fae092b1780>

[SecondLevelModel.compute_contrast] Extracting region signals

[SecondLevelModel.compute_contrast] Cleaning extracted signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Loading data from

<nibabel.nifti1.Nifti1Image object at 0x7fae098115d0>

[SecondLevelModel.compute_contrast] Extracting region signals

[SecondLevelModel.compute_contrast] Cleaning extracted signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

[SecondLevelModel.compute_contrast] Computing image from signals

Plot the results

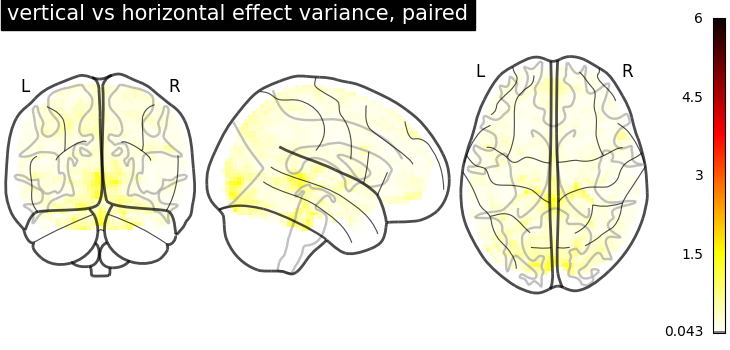

The two ‘effect_size’ images are essentially identical.

(

stat_maps_unpaired["effect_size"].get_fdata()

- stat_maps_paired["effect_size"].get_fdata()

).max()

np.float64(2.220446049250313e-15)
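The check above takes the maximum of the signed difference, which would not reveal a large negative difference. A slightly stricter comparison looks at the absolute difference. The sketch below illustrates the distinction on small synthetic arrays; `a` and `b` are hypothetical stand-ins for the two effect-size arrays, not the actual data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for two effect-size arrays that agree up to
# floating-point noise everywhere except at one voxel.
a = rng.normal(size=(4, 4, 4))
b = a + 1e-15 * rng.normal(size=a.shape)
b[0, 0, 0] += 1.0  # one voxel where b is much larger than a

# The signed maximum misses the discrepancy because a - b is negative there.
signed_max = (a - b).max()

# The absolute maximum catches it.
abs_max = np.abs(a - b).max()

print(signed_max, abs_max)
print(np.allclose(a, b))
```

Applied to the maps in this example, the same idea would amount to wrapping the difference of the two `get_fdata()` arrays in `np.abs(...)` before calling `.max()`.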

But the variance in the unpaired image is larger.

plot_glass_brain(

stat_maps_unpaired["effect_variance"],

vmin=0,

vmax=6,

cmap="inferno",

title="vertical vs horizontal effect variance, unpaired",

)

plot_glass_brain(

stat_maps_paired["effect_variance"],

vmin=0,

vmax=6,

cmap="inferno",

title="vertical vs horizontal effect variance, paired",

)

show()

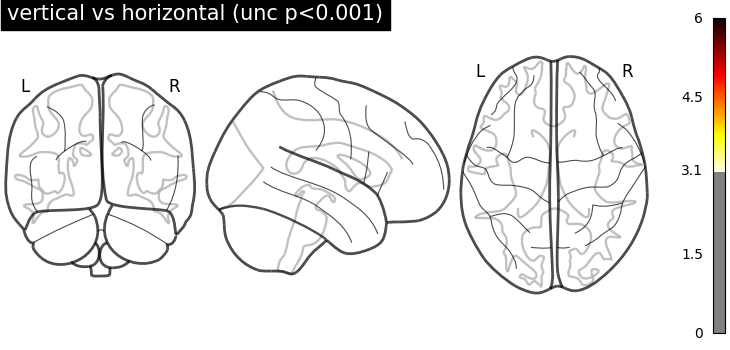

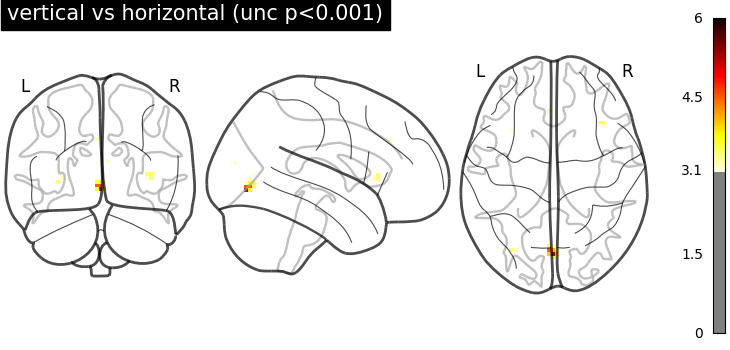
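Why the effect sizes agree while the variances differ can be illustrated on simulated data. The following is a minimal single-voxel sketch, assuming ordinary least squares and made-up numbers (large between-subject offsets, a true condition difference of 1.0, small measurement noise); the helper `fit_ols` is hypothetical and not part of nilearn.

```python
import numpy as np

rng = np.random.default_rng(42)
n_subjects = 16

# Hypothetical single-voxel data: each subject has a large personal offset
# shared by both conditions, plus small independent measurement noise.
subject_offset = rng.normal(scale=3.0, size=n_subjects)
vertical = subject_offset + 1.0 + rng.normal(scale=0.5, size=n_subjects)
horizontal = subject_offset + rng.normal(scale=0.5, size=n_subjects)
y = np.concatenate((vertical, horizontal))

# Same two designs as in the example, written as plain arrays.
condition = np.hstack(([1.0] * n_subjects, [0.0] * n_subjects))
subject_effect = np.vstack((np.eye(n_subjects), np.eye(n_subjects)))
unpaired = np.column_stack((condition, np.ones(2 * n_subjects)))
paired = np.column_stack((condition, subject_effect))


def fit_ols(design, data):
    """Return the first coefficient and its estimated variance."""
    beta, *_ = np.linalg.lstsq(design, data, rcond=None)
    residuals = data - design @ beta
    dof = design.shape[0] - np.linalg.matrix_rank(design)
    sigma2 = residuals @ residuals / dof
    cov = sigma2 * np.linalg.inv(design.T @ design)
    return beta[0], cov[0, 0]


effect_unpaired, var_unpaired = fit_ols(unpaired, y)
effect_paired, var_paired = fit_ols(paired, y)

print(effect_unpaired, effect_paired)  # same estimate of the difference
print(var_unpaired, var_paired)        # paired variance is much smaller
```

In this balanced setting both designs estimate the condition effect as the difference of the two sample means. The paired design, however, absorbs the between-subject offsets into the subject columns, so they no longer inflate the residual variance.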

Together, identical effect sizes and a smaller variance make the z-scores from the paired test larger. We threshold the second-level contrast and plot it.

threshold = 3.1 # corresponds to p < .001, uncorrected

plot_glass_brain(

stat_maps_unpaired["z_score"],

threshold=threshold,

plot_abs=False,

vmax=5.8,

title="vertical vs horizontal (unc p<0.001), unpaired",

)

plot_glass_brain(

stat_maps_paired["z_score"],

threshold=threshold,

plot_abs=False,

vmax=5.8,

title="vertical vs horizontal (unc p<0.001), paired",

)

show()
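The threshold of 3.1 used above can be related to the p-value through the inverse of the standard normal distribution. The sketch below uses only the Python standard library; the names `z_one_sided` and `z_two_sided` are introduced here for illustration.

```python
from statistics import NormalDist

p_value = 0.001
standard_normal = NormalDist()  # mean 0, standard deviation 1

# z-value whose upper tail has probability p (one-sided test).
z_one_sided = standard_normal.inv_cdf(1 - p_value)

# z-value whose two tails together have probability p (two-sided test).
z_two_sided = standard_normal.inv_cdf(1 - p_value / 2)

print(round(z_one_sided, 2), round(z_two_sided, 2))  # 3.09 3.29
```

The value 3.1 is therefore a rounded version of the one-sided threshold for p < 0.001.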

Unsurprisingly, we see activity in the primary visual cortex, both positive and negative.
