
Voxel-Based Morphometry on Oasis dataset with Space-Net prior

Predicting age from gray-matter concentration maps from the OASIS dataset. Because age is a continuous variable, we use the regressor (SpaceNetRegressor) here rather than the classifier.

See also

The documentation: SpaceNet: decoding with spatial structure for better maps.

For more information see the dataset description.

Load the Oasis VBM dataset

import numpy as np

from nilearn import datasets

n_subjects = 200
dataset_files = datasets.fetch_oasis_vbm(n_subjects=n_subjects)
age = dataset_files.ext_vars["age"].astype(float)
age = np.array(age)
gm_imgs = np.array(dataset_files.gray_matter_maps)

# Split data into training set and test set
from sklearn.model_selection import train_test_split
from sklearn.utils import check_random_state

rng = check_random_state(42)
gm_imgs_train, gm_imgs_test, age_train, age_test = train_test_split(
    gm_imgs, age, train_size=0.6, random_state=rng
)

# Sort test data for better visualization (trend, etc.)
perm = np.argsort(age_test)[::-1]
age_test = age_test[perm]
gm_imgs_test = gm_imgs_test[perm]

[fetch_oasis_vbm] Dataset found in /home/runner/nilearn_data/oasis1
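The split and the sort above can be checked on synthetic data. The sketch below uses only NumPy, with random ages and subject indices standing in for the OASIS images (an assumption for illustration; it uses a plain random permutation instead of train_test_split). It shows that a training fraction of 0.6 on 200 subjects gives 120 training and 80 test subjects, and why one shared permutation must be applied to both test arrays.

```python
import numpy as np

# Synthetic stand-ins (assumption): random ages for 200 "subjects" and an
# index array in place of the list of gray-matter image paths.
toy_rng = np.random.RandomState(0)
n_toy = 200
toy_age = toy_rng.uniform(18, 96, size=n_toy)
toy_ids = np.arange(n_toy)

# A 60/40 split of 200 subjects gives 120 training and 80 test subjects.
n_train = round(0.6 * n_toy)
shuffled = toy_rng.permutation(n_toy)
train_idx, test_idx = shuffled[:n_train], shuffled[n_train:]

# Sort the test subjects by decreasing age, applying ONE permutation to
# both arrays so that each image index stays paired with its own age.
toy_age_test = toy_age[test_idx]
toy_ids_test = toy_ids[test_idx]
order = np.argsort(toy_age_test)[::-1]
toy_age_test = toy_age_test[order]
toy_ids_test = toy_ids_test[order]

print(len(train_idx), len(test_idx))
```

Sorting only `toy_age_test` (or only the images) would silently break the pairing between each image and its age.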

Fit the SpaceNet and predict with it

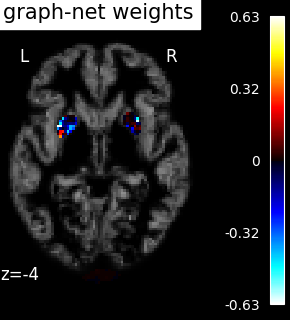

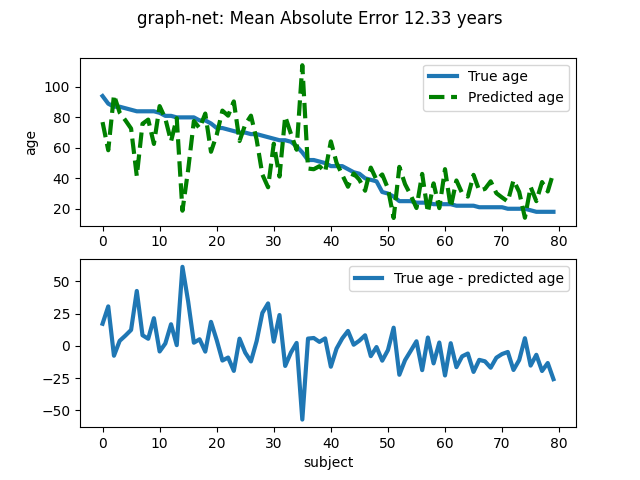

To save time (these are anatomical images with many voxels), we fit the model using only the 5% of voxels most correlated with age (screening_percentile=5.0). We also set memory_level=2 so that more of the intermediate computations are cached. We use a graph-net penalty here; the TV-L1 penalty (penalty="tv-l1") can give cleaner-looking maps, at the expense of longer runtimes. Finally, you can pass n_jobs=<some_high_value> to SpaceNetRegressor to take advantage of a multi-core system.
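The screening step can be pictured as a univariate filter applied before the penalized fit. The sketch below is a simplified NumPy illustration, not nilearn's implementation: `screen_voxels` is a hypothetical helper that ranks each voxel (column) by the absolute Pearson correlation between its values and age, then keeps the top `percentile` percent. The data are synthetic, with only the first 20 voxels made to depend on age.

```python
import numpy as np


def screen_voxels(X, y, percentile):
    """Hypothetical helper: boolean mask of the `percentile` % of columns of X
    whose absolute Pearson correlation with y is highest."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    # Pearson correlation of every voxel (column) with the target
    corr = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))
    score = np.abs(corr)
    # Keep the voxels whose score lies in the top `percentile` %
    threshold = np.percentile(score, 100 - percentile)
    return score >= threshold


# Synthetic data (assumption): 120 subjects x 1000 "voxels" of pure noise,
# except the first 20 voxels, which are made to increase with age.
toy_rng = np.random.RandomState(0)
toy_age = toy_rng.uniform(18, 96, size=120)
toy_X = toy_rng.normal(size=(120, 1000))
toy_X[:, :20] += 0.05 * toy_age[:, None]

toy_mask = screen_voxels(toy_X, toy_age, percentile=5.0)
print(toy_mask.sum(), toy_mask[:20].all())
```

With 1,000 voxels and `percentile=5.0`, the mask keeps 50 voxels, and the 20 age-dependent ones are among them. Only these surviving voxels would then enter the much more expensive penalized regression.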

from nilearn.decoding import SpaceNetRegressor

decoder = SpaceNetRegressor(
    memory="nilearn_cache",
    penalty="graph-net",
    screening_percentile=5.0,
    memory_level=2,
    n_jobs=2,
    verbose=1,
)
decoder.fit(gm_imgs_train, age_train)  # fit
coef_img = decoder.coef_img_
y_pred = decoder.predict(gm_imgs_test).ravel()  # predict

# Despite its name, `mse` holds the mean *absolute* error (in years),
# which is also what the figure title below reports.
mse = np.mean(np.abs(age_test - y_pred))
print(f"Mean absolute error (MAE) on the predicted age: {mse:.2f}")

[SpaceNetRegressor.fit] Loading data from
array(['/home/runner/nilearn_data/oasis1/OAS1_0003_MR1/mwrc1OAS1_0003_MR1_mpr_anon_fslswapdim_bet.nii.gz',
       ... (118 more training-image paths omitted) ...
       '/home/runner/nilearn_data/oasis1/OAS1_0114_MR1/mwrc1OAS1_0114_MR1_mpr_anon_fslswapdim_bet.nii.gz'],
      dtype='<U96')
[SpaceNetRegressor.fit] Computing mask
[SpaceNetRegressor.fit] Resampling mask
[SpaceNetRegressor.fit] Finished fit
[SpaceNetRegressor.fit] Loading data from <nibabel.nifti1.Nifti1Image object at 0x7f6f41fa4130>
[SpaceNetRegressor.fit] Extracting region signals
[SpaceNetRegressor.fit] Cleaning extracted signals
/home/runner/work/nilearn/nilearn/examples/02_decoding/plot_oasis_vbm_space_net.py:68: MaskWarning:
Brain mask (7221031.999999998 mm^3) is bigger than the standard human brain (1882989.0 mm^3).This object is probably not tuned to be used on such data.
[SpaceNetRegressor.fit] Mask volume = 7.22103e+06mm^3 = 7221.03cm^3
[SpaceNetRegressor.fit] Standard brain volume = 1.88299e+06mm^3
[SpaceNetRegressor.fit] Original screening-percentile: 5
[SpaceNetRegressor.fit] Corrected screening-percentile: 1.30382
[Parallel(n_jobs=2)]: Using backend LokyBackend with 2 concurrent workers.
/home/runner/work/nilearn/nilearn/.tox/doc/lib/python3.10/site-packages/joblib/externals/loky/process_executor.py:782: UserWarning:
A worker stopped while some jobs were given to the executor. This can be caused by a too short worker timeout or by a memory leak.
[Parallel(n_jobs=2)]: Done 8 out of 8 | elapsed: 1.6min finished
[SpaceNetRegressor.fit] Computing image from signals
[SpaceNetRegressor.fit] Time Elapsed: 107.896 seconds.
[SpaceNetRegressor.predict] Loading data from <nibabel.nifti1.Nifti1Image object at 0x7f6f418ac8e0>
[SpaceNetRegressor.predict] Extracting region signals
/home/runner/work/nilearn/nilearn/.tox/doc/lib/python3.10/site-packages/joblib/memory.py:607: JobLibCollisionWarning:
Cannot detect name collisions for function 'nifti_masker_extractor'
[SpaceNetRegressor.predict] Cleaning extracted signals

Mean absolute error (MAE) on the predicted age: 12.39
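The log above reports a requested screening percentile of 5 and a corrected value of 1.30382, together with the mask and standard-brain volumes. Those numbers are consistent with scaling the percentile by the ratio of standard-brain volume to mask volume, so that the kept voxels cover about the same absolute volume as 5% of a standard brain. The sketch below only reproduces that arithmetic from the logged values; `corrected_screening_percentile` is a hypothetical helper and the formula is an assumption, not a copy of nilearn's code.

```python
def corrected_screening_percentile(percentile, mask_volume_mm3, standard_volume_mm3):
    """Hypothetical helper: rescale a screening percentile by the ratio of a
    standard brain volume to the actual mask volume (assumed formula, checked
    only against the logged numbers)."""
    return percentile * standard_volume_mm3 / mask_volume_mm3


# Volumes copied from the MaskWarning in the log above, in mm^3
mask_volume = 7221031.999999998
standard_volume = 1882989.0

p = corrected_screening_percentile(5.0, mask_volume, standard_volume)
print(f"{p:.5f}")  # 1.30382, matching "Corrected screening-percentile"

# Absolute volume kept, in mm^3: the same for both (percentile, volume) pairs
print(p / 100 * mask_volume, 0.05 * standard_volume)
```

The large mask (about 7221 cm^3, versus about 1883 cm^3 for the standard brain) is why the effective percentile drops well below the requested 5%.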

Visualize the decoding maps and quality of predictions

import matplotlib.pyplot as plt

from nilearn.plotting import plot_stat_map, show

# weights map
background_img = gm_imgs[0]
plot_stat_map(
    coef_img,
    background_img,
    title="graph-net weights",
    display_mode="z",
    cut_coords=1,
)

# Plot the prediction errors.
plt.figure()
plt.suptitle(f"graph-net: Mean Absolute Error {mse:.2f} years")
linewidth = 3
ax1 = plt.subplot(211)
ax1.plot(age_test, label="True age", linewidth=linewidth)
ax1.plot(y_pred, "--", c="g", label="Predicted age", linewidth=linewidth)
ax1.set_ylabel("age")
plt.legend(loc="best")
ax2 = plt.subplot(212)
ax2.plot(
    age_test - y_pred, label="True age - predicted age", linewidth=linewidth
)
ax2.set_xlabel("subject")
plt.legend(loc="best")

show()
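The figure title labels the error as a mean absolute error, and the quantity stored in `mse` is indeed `np.mean(np.abs(age_test - y_pred))`. The toy NumPy example below, using made-up ages rather than OASIS data, contrasts that metric with the mean squared error and its square root; note that the squared error is in years squared, while the other two are in years.

```python
import numpy as np

# Made-up true and predicted ages (illustration only, not OASIS data)
toy_true = np.array([80.0, 60.0, 40.0, 20.0])
toy_pred = np.array([70.0, 62.0, 43.0, 25.0])

toy_errors = toy_true - toy_pred           # [10, -2, -3, -5]
toy_mae = np.mean(np.abs(toy_errors))      # (10 + 2 + 3 + 5) / 4 = 5.0 years
toy_mse = np.mean(toy_errors ** 2)         # (100 + 4 + 9 + 25) / 4 = 34.5 years^2
toy_rmse = np.sqrt(toy_mse)                # about 5.87 years

print(toy_mae, toy_mse, toy_rmse)
```

The single 10-year miss dominates the squared error, so RMSE sits above MAE whenever errors are uneven; MAE is the easier number to read as "typical error in years".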

Total running time of the script: (1 minutes 53.842 seconds)

Estimated memory usage: 3000 MB