Note

Go to the end to download the full example code or to run this example in your browser via Binder.

Functional connectivity predicts age group¶

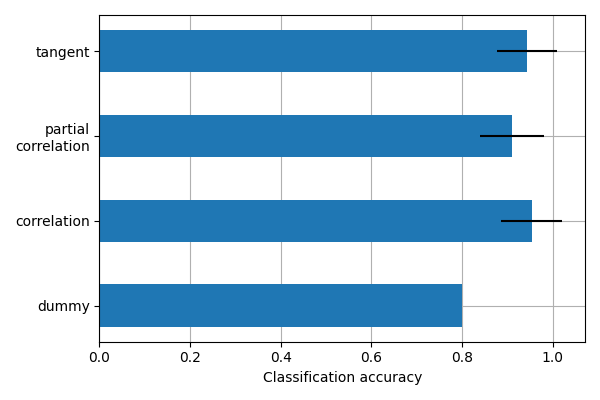

This example compares different kinds of functional connectivity between regions of interest : correlation, partial correlation, and tangent space embedding.

The resulting connectivity coefficients can be used to discriminate children from adults. In general, the tangent space embedding outperforms the standard correlations: see Dadi et al.[1] for a careful study.

Warning

If you are using Nilearn with a version older than 0.9.0,

then you should either upgrade your version or import maskers

from the input_data module instead of the maskers module.

That is, you should manually replace in the following example all occurrences of:

from nilearn.maskers import NiftiMasker

with:

from nilearn.input_data import NiftiMasker

try:

import matplotlib.pyplot as plt

except ImportError as e:

raise RuntimeError("This script needs the matplotlib library") from e

Load brain development fMRI dataset and MSDL atlas¶

We study only 60 subjects from the dataset, to save computation time.

from nilearn.datasets import fetch_atlas_msdl, fetch_development_fmri

development_dataset = fetch_development_fmri(n_subjects=60)

[fetch_development_fmri] Dataset found in

/home/runner/nilearn_data/development_fmri

[fetch_development_fmri] Dataset found in

/home/runner/nilearn_data/development_fmri/development_fmri

[fetch_development_fmri] Dataset found in

/home/runner/nilearn_data/development_fmri/development_fmri

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f72286e80019c3c1af/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f92286e80018c3e463/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f6a743a90017608171/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f64712b400183b70d8/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3eb2286e80019c3c198/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3ed2286e80017c41b56/ ...

[fetch_development_fmri] ...done. (4 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3ee2286e80016c3c379/ ...

[fetch_development_fmri] ...done. (4 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3ee4712b400183b70c3/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3efa743a9001660a0d5/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f14712b4001a3b560e/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f1a743a90017608164/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f12286e80016c3c37e/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f34712b4001a3b5612/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3f7a743a90019606cdf/ ...

[fetch_development_fmri] ...done. (4 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb470153992690018133d3b/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e793992690017108eb9/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb47038353c5800199ac9a2/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e85a3bc97001aeff750/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4701c3992690018133d49/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e1c3992690018133a9e/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff39aa743a900176080bf/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff39d4712b400193b5b89/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4703039926900160f6b3e/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e4d353c58001b9cb325/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4700af2be3c0017056f69/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e0cf2be3c001801f757/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4702b39926900171090e4/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e35f2be3c00190305ff/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a12286e80017c41a48/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a12286e80016c3c2fc/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4701ff2be3c0017056fad/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e0339926900160f6930/ ...

[fetch_development_fmri] ...done. (4 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff39fa743a90018606e2f/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a34712b4001a3b55a3/ ...

[fetch_development_fmri] ...done. (4 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4703439926900160f6b43/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e40f2be3c001801f77f/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a34712b400193b5b92/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a84712b400183b7048/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff39aa743a90018606e21/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff39aa743a900176080ba/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a72286e80017c41a54/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a7a743a90018606e42/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4702639926900190faf1d/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e3f353c5800199ac787/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff39ca743a90019606c50/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3a2a743a9001660a048/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb47020f2be3c0019030968/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e6f353c58001a9b3311/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb47016a3bc970018f1fc88/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e6ba3bc970019f07152/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff395a743a900176080af/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3964712b400193b5b7d/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb47057f2be3c0019030a1f/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e63f2be3c0017056ba9/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb4704af2be3c001705703b/ ...

[fetch_development_fmri] ...done. (3 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5cb46e7a353c58001a9b3324/ ...

[fetch_development_fmri] ...done. (5 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3952286e80016c3c2e7/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3954712b400193b5b79/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff399a743a9001660a031/ ...

[fetch_development_fmri] ...done. (1 seconds, 0 min)

[fetch_development_fmri] Downloading data from

https://osf.io/download/5c8ff3982286e80017c41a29/ ...

[fetch_development_fmri] ...done. (2 seconds, 0 min)

We use probabilistic regions of interest (ROIs) from the MSDL atlas.

from nilearn.maskers import NiftiMapsMasker

msdl_data = fetch_atlas_msdl()

msdl_coords = msdl_data.region_coords

masker = NiftiMapsMasker(

msdl_data.maps,

resampling_target="data",

t_r=development_dataset.t_r,

detrend=True,

low_pass=0.1,

high_pass=0.01,

memory="nilearn_cache",

memory_level=1,

standardize="zscore_sample",

standardize_confounds=True,

verbose=1,

)

masked_data = list(

map(

masker.fit_transform,

development_dataset.func,

development_dataset.confounds,

)

)

[fetch_atlas_msdl] Dataset found in /home/runner/nilearn_data/msdl_atlas

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar135_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ef02f3af0>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar135_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.2s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar123_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1eb74c1090>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar123_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar124_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee857c6d0>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar124_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar125_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1f0cfe1a20>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar125_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar126_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar126_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar127_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar127_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar134_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar134_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar129_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece501870>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar129_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar130_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee862b040>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar130_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar131_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar131_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar132_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece503460>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar132_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar133_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee862b040>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar133_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar128_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece503460>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar128_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar032_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1f0cfe27a0>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar032_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar033_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1f0cfe27a0>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar033_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar034_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee862b040>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar034_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar035_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece501870>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar035_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar036_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar036_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar037_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee857c340>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar037_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar038_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece501870>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar038_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar041_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece501870>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar041_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar040_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1f0cfe1a20>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar040_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar042_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar042_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar043_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece501870>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar043_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar044_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece503460>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar044_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar031_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee862b040>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar031_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar046_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar046_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar047_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee857c160>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar047_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar039_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece501870>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar039_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar045_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1eb74c2ec0>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar045_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar001_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece503460>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar001_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar028_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece501870>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar028_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar002_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee862b040>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar002_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar003_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee857c910>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar003_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar004_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee862b040>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar004_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.0s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar005_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee862b040>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar005_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar006_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ee8466e30>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar006_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions

[NiftiMapsMasker.wrapped] Finished fit

________________________________________________________________________________

[Memory] Calling nilearn.maskers.base_masker.filter_and_extract...

filter_and_extract('/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar007_task-pixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz',

<nilearn.maskers.nifti_maps_masker._ExtractionFunctor object at 0x7f1ece503460>, { 'allow_overlap': True,

'clean_args': None,

'clean_kwargs': {},

'cmap': 'CMRmap_r',

'detrend': True,

'dtype': None,

'high_pass': 0.01,

'high_variance_confounds': False,

'keep_masked_maps': False,

'low_pass': 0.1,

'maps_img': '/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii',

'mask_img': None,

'reports': True,

'smoothing_fwhm': None,

'standardize': 'zscore_sample',

'standardize_confounds': True,

't_r': 2,

'target_affine': None,

'target_shape': None}, confounds=None, sample_mask=None, dtype=None, memory=Memory(location=nilearn_cache/joblib), memory_level=1, verbose=1, sklearn_output_config=None)

[NiftiMapsMasker.wrapped] Loading data from

'/home/runner/nilearn_data/development_fmri/development_fmri/sub-pixar007_task-p

ixar_space-MNI152NLin2009cAsym_desc-preproc_bold.nii.gz'

[NiftiMapsMasker.wrapped] Extracting region signals

[NiftiMapsMasker.wrapped] Cleaning extracted signals

_______________________________________________filter_and_extract - 1.1s, 0.0min

[NiftiMapsMasker.wrapped] Loading regions from

'/home/runner/nilearn_data/msdl_atlas/MSDL_rois/msdl_rois.nii'

[NiftiMapsMasker.wrapped] Resampling regions