Note

This page is reference documentation. It explains only the class signature, not how to use it. Please refer to the user guide for the big picture.

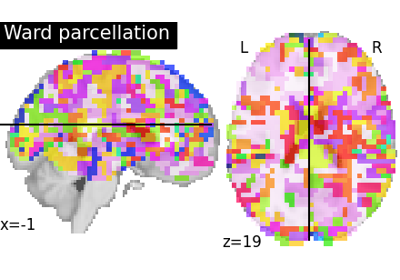

8.8.8. nilearn.regions.Parcellations

- class nilearn.regions.Parcellations(method, n_parcels=50, random_state=0, mask=None, smoothing_fwhm=4.0, standardize=False, detrend=False, low_pass=None, high_pass=None, t_r=None, target_affine=None, target_shape=None, mask_strategy='epi', mask_args=None, scaling=False, n_iter=10, memory=Memory(location=None), memory_level=0, n_jobs=1, verbose=1)

Learn parcellations on fMRI images.

Five clustering methods are available: kmeans, ward, complete, average and rena. kmeans calls MiniBatchKMeans, whereas ward, complete and average are used within AgglomerativeClustering, and rena calls ReNA. kmeans, ward, complete and average are taken from scikit-learn; rena is built into nilearn.

New in version 0.4.1.

- Parameters

- method: str, {‘kmeans’, ‘ward’, ‘complete’, ‘average’, ‘rena’}

The clustering method used to parcellate the brain. For a small number of parcels, kmeans is usually advisable. For a large number of parcels (several hundreds or thousands), ward and rena are the best options. Ward gives higher-quality parcels, but at a higher computational cost. ReNA is most useful as a fast data-reduction step, typically dividing the signal size by ten.

- n_parcels: int, optional

Number of parcels to divide the brain data into. Default=50.

- random_state: int or RandomState, optional

Pseudo-random number generator state used for random sampling. Default=0.

- mask: Niimg-like object, or NiftiMasker or MultiNiftiMasker instance, optional

Mask or masker used for masking the data. If a mask image is provided, it is used in the MultiNiftiMasker. If an instance of MultiNiftiMasker is provided, its parameters are used for masking the data, overriding the default masker parameters. If None, the mask is computed automatically by a MultiNiftiMasker with default parameters.

- smoothing_fwhm: float, optional

If smoothing_fwhm is not None, it gives the full-width half maximum in millimeters of the spatial smoothing to apply to the signal. Default=4.0.

- standardize: boolean, optional

If standardize is True, the time series are centered and normed: their mean is set to 0 and their variance to 1 along the time dimension. Default=False.
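The effect of standardization can be illustrated with plain NumPy. This is a minimal sketch on made-up data, not the library's own code path; it assumes the population standard deviation is used, which may differ from what a given nilearn version does.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: 100 scans (rows) by 5 voxels (columns).
signals = rng.normal(loc=10.0, scale=3.0, size=(100, 5))

# Center and scale each voxel's time series along the time axis (axis 0).
standardized = (signals - signals.mean(axis=0)) / signals.std(axis=0)

print(standardized.mean(axis=0).round(6))
print(standardized.std(axis=0).round(6))
```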

- detrend: boolean, optional

Whether to detrend signals or not. This parameter is passed to signal.clean. Please see the related documentation for details. Default=False.

- low_pass: None or float, optional

This parameter is passed to signal.clean. Please see the related documentation for details.

- high_pass: None or float, optional

This parameter is passed to signal.clean. Please see the related documentation for details.

- t_r: float, optional

This parameter is passed to signal.clean. Please see the related documentation for details.

- target_affine: 3x3 or 4x4 matrix, optional

This parameter is passed to image.resample_img. Please see the related documentation for details. The given affine is assumed to be the same for all images in the list.

- target_shape: 3-tuple of integers, optional

This parameter is passed to image.resample_img. Please see the related documentation for details.

- mask_strategy: {‘epi’, ‘background’, or ‘template’}, optional

The strategy used to compute the mask: use ‘background’ if your images present a clear homogeneous background, ‘epi’ if they are raw EPI images, or you could use ‘template’ which will extract the gray matter part of your data by resampling the MNI152 brain mask for your data’s field of view. Depending on this value, the mask will be computed from masking.compute_background_mask, masking.compute_epi_mask or masking.compute_brain_mask. Default=’epi’.

- mask_args: dict, optional

If mask is None, these are additional parameters passed to masking.compute_background_mask or masking.compute_epi_mask to fine-tune mask computation. Please see the related documentation for details.

- scaling: bool, optional

Used only when the method selected is ‘rena’. If scaling is True, each cluster is scaled by the square root of its size, preserving the l2-norm of the image. Default=False.
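The norm-preserving effect of scaling can be checked on a toy example. The sketch below is an illustration in plain NumPy, not nilearn's ReNA implementation; it assumes each cluster is summarized by its mean and uses a signal that is constant within each cluster, which is the case where the l2-norm is preserved exactly.

```python
import numpy as np

# Hypothetical clustering of 6 voxels into 3 clusters of sizes 3, 2 and 1.
labels = np.array([0, 0, 0, 1, 1, 2])
# Toy image that is constant within each cluster.
image = np.array([2.0, 2.0, 2.0, -1.0, -1.0, 4.0])

sizes = np.bincount(labels)
cluster_means = np.bincount(labels, weights=image) / sizes

# Scale each cluster value by the square root of the cluster size.
scaled = cluster_means * np.sqrt(sizes)

norm_full = np.linalg.norm(image)
norm_means = np.linalg.norm(cluster_means)
norm_scaled = np.linalg.norm(scaled)
print(norm_full, norm_means, norm_scaled)
```

Without scaling, the reduced representation has a smaller norm than the original image; with scaling, the two norms agree.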

- n_iter: int, optional

Used only when the method selected is ‘rena’. Number of iterations of the recursive neighbor agglomeration. Default=10.

- memory: instance of joblib.Memory or str, optional

Used to cache the masking process. By default, no caching is done. If a string is given, it is the path to the caching directory.

- memory_level: integer, optional

Rough estimator of the amount of memory used by caching. Higher value means more memory for caching. Default=0.

- n_jobs: integer, optional

The number of CPUs to use to do the computation. -1 means ‘all CPUs’, -2 ‘all CPUs but one’, and so on. Default=1.
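The convention for negative values can be written out explicitly. The helper below is hypothetical (it is not part of nilearn or joblib) and only mirrors the rule stated above: -1 maps to all CPUs, -2 to all CPUs but one, and so on.

```python
def effective_n_jobs(n_jobs, n_cpus):
    """Translate an n_jobs value into a worker count (illustrative helper)."""
    if n_jobs == 0:
        raise ValueError("n_jobs == 0 has no meaning")
    if n_jobs < 0:
        # -1 -> n_cpus, -2 -> n_cpus - 1, ...; never fewer than one worker.
        return max(n_cpus + 1 + n_jobs, 1)
    return n_jobs


for requested in (1, -1, -2):
    print(requested, effective_n_jobs(requested, n_cpus=8))
```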

- verbose: integer, optional

Indicate the level of verbosity. If 0, nothing is printed. Default=1, as given in the class signature.

Notes

Processing a list of Nifti images takes a few steps: transforming the images to a data matrix, reducing the data dimensionality using randomized SVD, then building brain parcellations using KMeans or one of the agglomerative methods.

This object uses spatially-constrained AgglomerativeClustering for method=’ward’, ‘complete’ or ‘average’, and spatially-constrained ReNA clustering for method=’rena’. The spatial (voxel-to-voxel) connectivity matrix is built internally, so there is no need to provide it explicitly.

- Attributes

- `labels_img_`: Nifti1Image

Labels image of the parcellation learned on the fMRI images.

- `masker_`: instance of NiftiMasker or MultiNiftiMasker

The masker used to mask the data.

- `connectivity_`: numpy.ndarray

Voxel-to-voxel connectivity matrix computed from a mask. Note that this attribute is only available when the selected method is an agglomerative clustering type: ‘ward’, ‘complete’ or ‘average’.
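To make the idea of a voxel-to-voxel connectivity matrix concrete, here is a minimal NumPy sketch. It assumes that two in-mask voxels are connected when they share a face, and it returns a dense array for readability; the `grid_connectivity` helper is hypothetical, and the structure and storage format used by the library may differ.

```python
import numpy as np


def grid_connectivity(mask):
    """Dense 0/1 adjacency between in-mask voxels that share a face."""
    mask = np.asarray(mask, dtype=bool)
    n_voxels = int(mask.sum())
    # Give every in-mask voxel a consecutive index; -1 marks out-of-mask voxels.
    index = np.full(mask.shape, -1, dtype=int)
    index[mask] = np.arange(n_voxels)
    connectivity = np.zeros((n_voxels, n_voxels), dtype=int)
    for axis in range(mask.ndim):
        size = mask.shape[axis]
        # Pair each voxel with its next neighbor along this axis.
        first = np.take(index, np.arange(size - 1), axis=axis).ravel()
        second = np.take(index, np.arange(1, size), axis=axis).ravel()
        both = (first >= 0) & (second >= 0)
        connectivity[first[both], second[both]] = 1
        connectivity[second[both], first[both]] = 1
    return connectivity


# Toy mask: a 2 x 2 x 1 grid with one voxel excluded.
mask = np.ones((2, 2, 1), dtype=bool)
mask[1, 1, 0] = False
connectivity = grid_connectivity(mask)
print(connectivity)
```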

- __init__(method, n_parcels=50, random_state=0, mask=None, smoothing_fwhm=4.0, standardize=False, detrend=False, low_pass=None, high_pass=None, t_r=None, target_affine=None, target_shape=None, mask_strategy='epi', mask_args=None, scaling=False, n_iter=10, memory=Memory(location=None), memory_level=0, n_jobs=1, verbose=1)

Initialize self. See help(type(self)) for accurate signature.

- VALID_METHODS = ['kmeans', 'ward', 'complete', 'average', 'rena']

- transform(imgs, confounds=None)

Extract signals from parcellations learned on fMRI images.

- Parameters

- imgs: list of Nifti-like images

See http://nilearn.github.io/manipulating_images/input_output.html. Images to process.

- confounds: list of CSV files, array-likes or pandas DataFrames, optional

Each file or numpy array in the list should have shape (number of scans, number of confounds). This parameter is passed to signal.clean. Please see the related documentation for details. Must have the same length as imgs.

- Returns

- region_signals: list of 2D numpy.ndarray, or 2D numpy.ndarray

Signals extracted for each label for each image. For example, for a single image the shape is (number of scans, number of labels).
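The output shape can be illustrated without any imaging data. The sketch below assumes that the signal of a region is the mean of the voxel time series carrying that label; it works on a toy 2D matrix and made-up labels, not on the library's masker objects.

```python
import numpy as np

rng = np.random.default_rng(0)
n_scans, n_voxels = 6, 10
# Hypothetical masked data: one row per scan, one column per voxel.
voxel_signals = rng.normal(size=(n_scans, n_voxels))
# Hypothetical parcel label of each voxel (three parcels).
labels = np.array([1, 1, 1, 2, 2, 2, 2, 3, 3, 3])

label_ids = np.unique(labels)
# Average the voxel time series within each label.
region_signals = np.column_stack(
    [voxel_signals[:, labels == label].mean(axis=1) for label in label_ids]
)
print(region_signals.shape)
```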

- fit_transform(imgs, confounds=None)

Fit the images to parcellations and then transform them.

- Parameters

- imgs: list of Nifti-like images

See http://nilearn.github.io/manipulating_images/input_output.html. Images used both for fitting and for transforming to signals.

- confounds: list of CSV files, array-likes or pandas DataFrames, optional

Each file or numpy array in the list should have shape (number of scans, number of confounds). This parameter is passed to signal.clean. If given as a list, confounds should have the same length as imgs.

Note: the same confounds will be used for cleaning signals before learning parcellations.

- Returns

- region_signals: list of 2D numpy.ndarray, or 2D numpy.ndarray

Signals extracted for each label for each image. For example, for a single image the shape is (number of scans, number of labels).

- inverse_transform(signals)

Transform signals extracted from parcellations back to brain images.

Uses labels_img_ (parcellations) built at fit() level.

- Parameters

- signals: list of 2D numpy.ndarray

Each 2D array with shape (number of scans, number of regions).

- Returns

- imgs: list of Nifti-like images, or Nifti-like image

Brain image(s).
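Conceptually, mapping region signals back to brain space assigns each voxel the signal of the parcel it belongs to. The following NumPy sketch shows that idea on a flat array of voxels with made-up labels; it is an assumption about the general mechanism, not the library's implementation, and it omits the final step of reshaping into a Nifti image.

```python
import numpy as np

# Hypothetical parcel label of each of 6 voxels.
labels = np.array([1, 1, 2, 2, 2, 3])
# Region signals with shape (number of scans, number of regions).
region_signals = np.array([[0.5, -1.0, 2.0],
                           [1.5, 0.0, -2.0]])

label_ids = np.unique(labels)
# Column of region_signals that each voxel should read from.
column_of_voxel = np.searchsorted(label_ids, labels)
voxel_signals = region_signals[:, column_of_voxel]
print(voxel_signals)
```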

- fit(imgs, y=None, confounds=None)

Compute the mask and the components across subjects.

- Parameters

- imgs: list of Niimg-like objects

See http://nilearn.github.io/manipulating_images/input_output.html. Data on which the mask is calculated. If this is a list, the affine is considered the same for all.

- confounds: list of CSV file paths, numpy.ndarrays or pandas DataFrames, optional

This parameter is passed to nilearn.signal.clean. Please see the related documentation for details. Should match the list of imgs given.

- Returns

- self: object

Returns the instance itself. Contains attributes listed at the object level.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters

- deep: bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns

- params: dict

Parameter names mapped to their values.

- score(imgs, confounds=None, per_component=False)

Score function based on explained variance on imgs.

Should only be used by DecompositionEstimator derived classes.

- Parameters

- imgs: iterable of Niimg-like objects

See http://nilearn.github.io/manipulating_images/input_output.html. Data to be scored.

- confounds: CSV file path, numpy.ndarray or pandas DataFrame, optional

This parameter is passed to nilearn.signal.clean. Please see the related documentation for details.

- per_component: bool, optional

Specify whether the explained variance ratio is desired for each map or for the global set of components. Default=False.

- Returns

- score: float

Holds the score for each subject. The score is two-dimensional if per_component is True. The first dimension is squeezed if the number of subjects is one.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters

- **params: dict

Estimator parameters.

- Returns

- self: estimator instance

Estimator instance.
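The `<component>__<parameter>` naming used by set_params for nested objects can be illustrated with a small pure-Python helper. This is a sketch of the naming convention only, not scikit-learn's implementation; the `split_nested_params` helper and the `masker` component name are hypothetical.

```python
def split_nested_params(params):
    """Separate top-level parameters from '<component>__<parameter>' ones."""
    own = {}
    nested = {}
    for key, value in params.items():
        # Split on the first double underscore only.
        name, delimiter, sub_key = key.partition("__")
        if delimiter:
            nested.setdefault(name, {})[sub_key] = value
        else:
            own[key] = value
    return own, nested


own, nested = split_nested_params(
    {"n_parcels": 100, "masker__smoothing_fwhm": 6.0}
)
print(own)
print(nested)
```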