Note

This page is reference documentation. It only explains the function signature, not how to use it. Please refer to the user guide for the big picture.

nilearn.datasets.fetch_haxby

- nilearn.datasets.fetch_haxby(data_dir=None, subjects=(2,), fetch_stimuli=False, url=None, resume=True, verbose=1)

Download and load the complete Haxby dataset.

See Haxby et al. [1].

- Parameters:

- data_dir : pathlib.Path or str, optional

Path where data should be downloaded. By default, files are downloaded in a nilearn_data folder in the home directory of the user. See also nilearn.datasets.utils.get_data_dirs.

- subjects : list or int, default=(2,)

Either a list of subjects or the number of subjects to load, from 1 to 6. By default, the 2nd subject is loaded. An empty list returns no subject data.

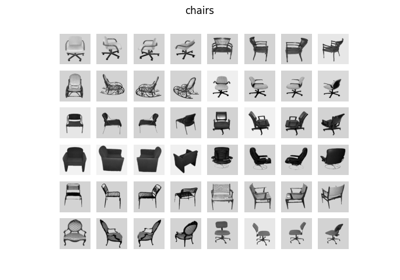

- fetch_stimuli : bool, default=False

Whether to download the stimulus images. They are returned as a dictionary of categories.

- url : str, default=None

URL of the file to download; overrides the default download URL. Used for testing only (or if you set up a mirror of the data).

- resume : bool, default=True

Whether to resume download of a partly-downloaded file.

- verbose : int, default=1

Verbosity level (0 means no message).
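The `subjects` rule above is easy to misread, since the default `(2,)` is a tuple of IDs while an integer is a count. The sketch below makes the rule explicit with `expected_subject_ids`, a hypothetical helper written for this page (it is not part of nilearn). It assumes that an integer `n` selects the first `n` subjects (IDs 1 to n) and that a list or tuple names subject IDs directly; its out-of-range checks are its own and may differ from what nilearn does. The guarded block shows a call that uses the documented parameters and needs nilearn and network access.

```python
"""Sketch: which Haxby subjects a given ``subjects`` argument selects."""
from numbers import Integral

N_SUBJECTS = 6  # the Haxby dataset has subjects 1 to 6


def expected_subject_ids(subjects=(2,)):
    """Return the subject IDs implied by a ``subjects`` argument.

    Hypothetical helper, not part of nilearn. Assumes an integer ``n``
    selects the first ``n`` subjects (IDs 1..n) and that a list or tuple
    names subject IDs directly. Out-of-range values raise ValueError here;
    nilearn itself may handle them differently.
    """
    if isinstance(subjects, Integral):
        if not 0 <= subjects <= N_SUBJECTS:
            raise ValueError(f"number of subjects must be in 0..{N_SUBJECTS}")
        return list(range(1, subjects + 1))
    ids = list(subjects)
    for sub_id in ids:
        if not 1 <= sub_id <= N_SUBJECTS:
            raise ValueError(f"subject ID {sub_id} is not in 1..{N_SUBJECTS}")
    return ids


if __name__ == "__main__":
    # Requires nilearn, plus network access the first time the data is fetched.
    from nilearn import datasets

    wanted = [1, 3]
    # Subjects 1 and 3 only, no stimulus images, no progress messages.
    haxby = datasets.fetch_haxby(subjects=wanted, fetch_stimuli=False, verbose=0)
    print("subjects:", expected_subject_ids(wanted))
    print("labels files:", haxby.session_target)
```

Passing an explicit list keeps the download limited to the subjects you actually analyze, which matters because each subject adds its own functional and mask files.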


- Returns:

- data : sklearn.datasets.base.Bunch

Dictionary-like object. The attributes of interest are:

- ‘session_target’: list of str. Paths to text files containing run and target data.
- ‘mask’: str. Path to the full-brain mask file.
- ‘mask_vt’: list of str. Paths to NIfTI ventral temporal mask files.
- ‘mask_face’: list of str. Paths to NIfTI files with face-responsive brain regions.
- ‘mask_face_little’: list of str. Spatially more constrained version of the above.
- ‘mask_house’: list of str. Paths to NIfTI files with house-responsive brain regions.
- ‘mask_house_little’: list of str. Spatially more constrained version of the above.
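Each ‘session_target’ file holds the per-volume labels that a decoder is trained on. The sketch below reads one and selects face and house volumes. It assumes, as in nilearn's gallery examples, a space-separated text file whose header row names a `labels` column (condition of each volume) and a `chunks` column (run index of each volume). `parse_session_target` and `condition_mask` are hypothetical helpers written for illustration; the stdlib `csv` module stands in for the pandas reader the gallery examples use.

```python
"""Sketch: read a Haxby ``session_target`` file and select conditions."""
import csv


def parse_session_target(lines):
    """Return (labels, chunks) parsed from the lines of a session_target file.

    Hypothetical helper. Assumes a space-separated file whose header row
    names a ``labels`` column and a ``chunks`` column (run index).
    """
    rows = list(csv.DictReader(lines, delimiter=" "))
    labels = [row["labels"] for row in rows]
    chunks = [int(row["chunks"]) for row in rows]
    return labels, chunks


def condition_mask(labels, conditions=("face", "house")):
    """Return one boolean per volume: True if its label is in ``conditions``."""
    wanted = set(conditions)
    return [label in wanted for label in labels]


if __name__ == "__main__":
    from nilearn import datasets  # requires nilearn and network access

    haxby = datasets.fetch_haxby()  # default: subject 2 only
    with open(haxby.session_target[0], newline="") as f:
        labels, chunks = parse_session_target(f)
    keep = condition_mask(labels)
    print(f"{sum(keep)} face/house volumes across {len(set(chunks))} runs")
    print("ventral temporal mask:", haxby.mask_vt[0])
```

The boolean list lines up with the volumes of the corresponding functional run data, so it can be used to index the time series restricted to a region such as ‘mask_vt’.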

Notes

PyMVPA provides a tutorial making use of this dataset: http://www.pymvpa.org/tutorial.html

More information about its structure: http://dev.pymvpa.org/datadb/haxby2001.html

See additional information: https://www.science.org/doi/10.1126/science.1063736

Run 8 in subject 5 does not contain any task labels. The anatomical image for subject 6 is unavailable.
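The missing labels in subject 5 matter for decoding with run-wise cross-validation, because a run without task labels contributes no samples for the conditions of interest. The sketch below flags such runs from the ‘session_target’ labels. It rests on a loudly stated assumption: that volumes without a task label are marked `rest` in the `labels` column, with run indices taken from the `chunks` column. It does not assume how the run numbering in the note above maps onto `chunks` values. `runs_without_task_labels` is a hypothetical helper, not a nilearn function.

```python
"""Sketch: find runs that contain no task labels before run-wise CV."""
import csv
from collections import defaultdict


def runs_without_task_labels(labels, chunks, rest_label="rest"):
    """Return the sorted run indices in which every volume is ``rest_label``.

    Hypothetical helper. Assumes volumes without a task label carry
    ``rest_label``; check this against your copy of the data.
    """
    has_task = defaultdict(bool)  # run index -> seen any non-rest label?
    for label, run in zip(labels, chunks):
        has_task[run] = has_task[run] or label != rest_label
    return sorted(run for run, flag in has_task.items() if not flag)


if __name__ == "__main__":
    from nilearn import datasets  # requires nilearn and network access

    haxby = datasets.fetch_haxby(subjects=[5], verbose=0)
    with open(haxby.session_target[0], newline="") as f:
        rows = list(csv.DictReader(f, delimiter=" "))
    labels = [row["labels"] for row in rows]
    chunks = [int(row["chunks"]) for row in rows]
    bad_runs = runs_without_task_labels(labels, chunks)
    print("runs without task labels:", bad_runs)
    # Volumes to keep if those runs are excluded from run-wise cross-validation.
    keep = [run not in bad_runs for run in chunks]
```

If the helper returns an empty list for subject 5, the unlabeled run is represented differently from the assumption above, and the labels file should be inspected directly.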

References

Examples using nilearn.datasets.fetch_haxby#

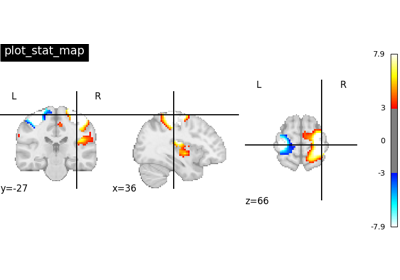

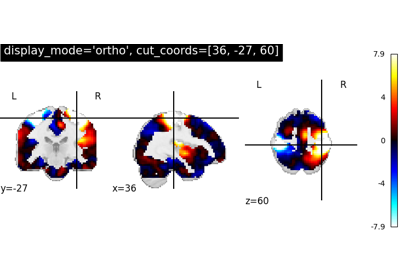

Decoding with FREM: face vs house vs chair object recognition

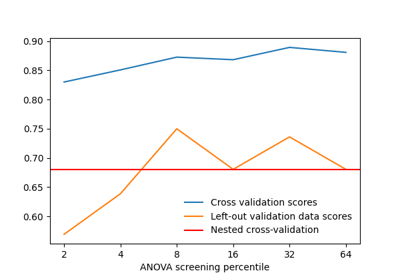

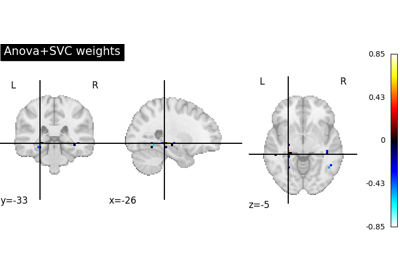

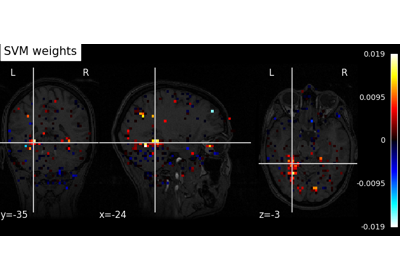

Decoding with ANOVA + SVM: face vs house in the Haxby dataset

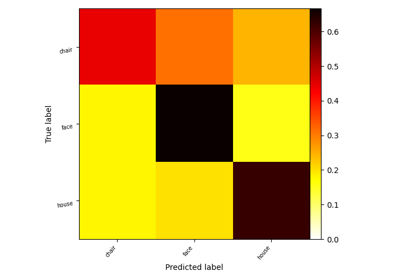

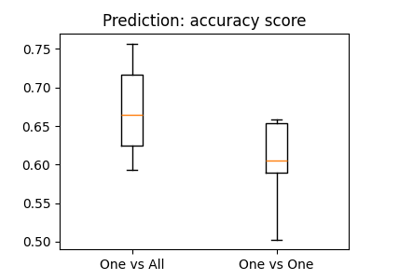

The haxby dataset: different multi-class strategies

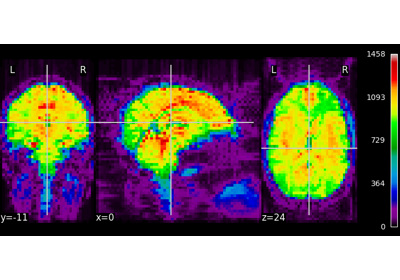

Decoding of a dataset after GLM fit for signal extraction

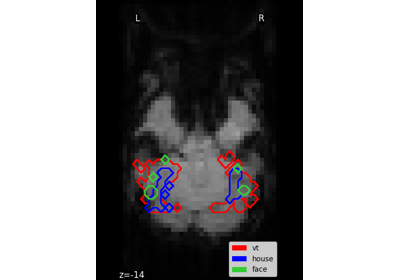

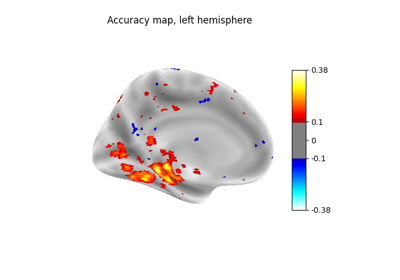

ROI-based decoding analysis in Haxby et al. dataset

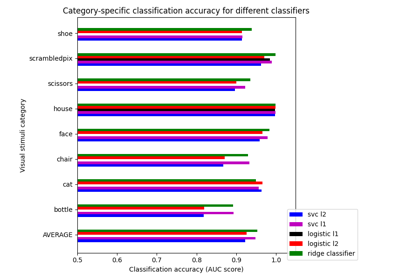

Different classifiers in decoding the Haxby dataset

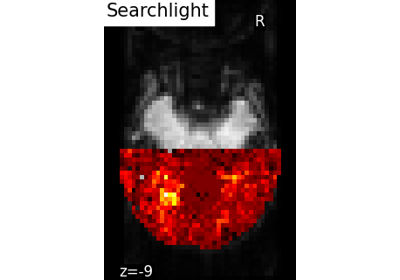

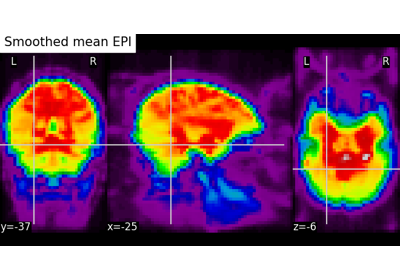

Computing a Region of Interest (ROI) mask manually

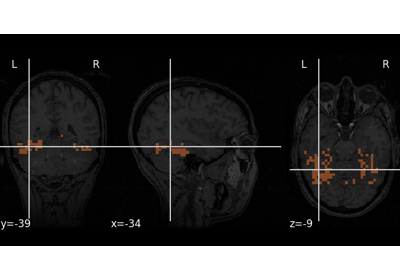

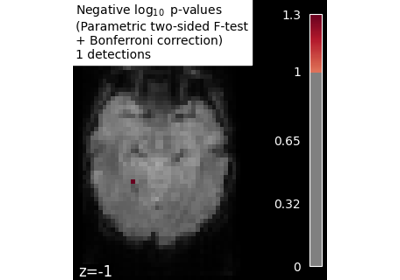

Massively univariate analysis of face vs house recognition