FREM on Jimura et al. “mixed gambles” dataset

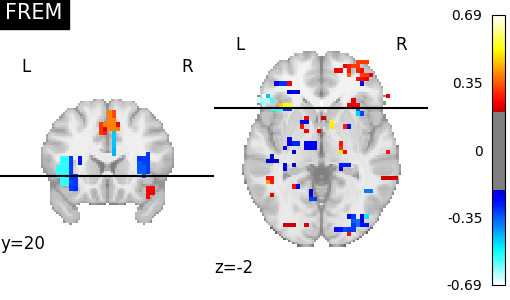

In this example, we use fast ensembling of regularized models (FREM) to solve a regression problem: predicting the gain level corresponding to each beta map regressed from the mixed gambles experiment. FREM applies an implicit spatial regularization through fast clustering and aggregates a large number of estimators trained on various splits of the training set, which yields a very robust decoder at a lower computational cost than other spatially regularized methods.

For more details, see: FREM: fast ensembling of regularized models for robust decoding.
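To make the two ingredients described above concrete (spatial reduction by clustering, then averaging estimators fitted on different splits), here is a minimal NumPy sketch on synthetic data. It is not nilearn's implementation: the fixed contiguous blocks stand in for the fast clustering, ridge regression stands in for the base estimator, and all names and sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for masked brain maps: 80 samples, 60 "voxels".
n_samples, n_voxels, cluster_size = 80, 60, 5
true_weights = np.zeros(n_voxels)
true_weights[10:20] = 1.0  # one contiguous informative region
X = rng.standard_normal((n_samples, n_voxels))
y = X @ true_weights + 0.1 * rng.standard_normal(n_samples)

# Step 1: spatial reduction. Contiguous blocks of voxels are averaged,
# a crude substitute for the fast clustering used by FREM.
labels = np.arange(n_voxels) // cluster_size
n_clusters = labels.max() + 1
averaging = np.zeros((n_voxels, n_clusters))
averaging[np.arange(n_voxels), labels] = 1.0 / cluster_size
X_reduced = X @ averaging


def fit_ridge(features, target, alpha=1.0):
    """Closed-form ridge regression weights (illustrative base estimator)."""
    gram = features.T @ features + alpha * np.eye(features.shape[1])
    return np.linalg.solve(gram, features.T @ target)


# Step 2: fit one estimator per random split of the training set.
n_splits = 20
split_weights = []
for _ in range(n_splits):
    train = rng.choice(n_samples, size=int(0.8 * n_samples), replace=False)
    split_weights.append(fit_ridge(X_reduced[train], y[train]))

# Step 3: average the estimators, then map cluster weights back to voxels.
ensemble_weights = np.mean(split_weights, axis=0)
voxel_weights = averaging @ ensemble_weights  # shape (n_voxels,)
```

Because every voxel inherits the weight of its cluster, the resulting map is piecewise constant over clusters, and averaging over splits damps the variability of any single fit.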

Load the data from the Jimura mixed-gamble experiment

from nilearn.datasets import fetch_mixed_gambles

data = fetch_mixed_gambles(n_subjects=16)
zmap_filenames = data.zmaps
behavioral_target = data.gain
mask_filename = data.mask_img

Dataset created in /home/remi/nilearn_data/jimura_poldrack_2012_zmaps

Downloading data from https://www.nitrc.org/frs/download.php/7229/jimura_poldrack_2012_zmaps.zip ...

Downloaded 104243200 of 104293434 bytes (100.0%, 0.1s remaining) ...done. (112 seconds, 1 min)

Extracting data from /home/remi/nilearn_data/jimura_poldrack_2012_zmaps/a4c8868ab5c651b8594da6f3204ded3a/jimura_poldrack_2012_zmaps.zip..... done.
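Before fitting, it can be worth confirming that there is exactly one target value per map. The sketch below uses a hypothetical helper, `describe_samples`, which is not part of nilearn; it only assumes that the maps and the targets are sequences of equal length.

```python
def describe_samples(image_paths, targets):
    """Check that images and targets align, then summarize the targets."""
    targets = list(targets)
    if len(image_paths) != len(targets):
        raise ValueError(
            f"{len(image_paths)} images but {len(targets)} target values"
        )
    return {
        "n_samples": len(targets),
        "n_distinct_targets": len(set(targets)),
        "target_range": (min(targets), max(targets)),
    }


# Toy input; with the real data one would pass zmap_filenames and
# behavioral_target instead.
summary = describe_samples(
    ["map_a.nii.gz", "map_b.nii.gz", "map_c.nii.gz"], [1, 2, 2]
)
print(summary)
```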

Fit FREM

We fit FREM, a pipeline that ensembles many models.

from nilearn.decoding import FREMRegressor

frem = FREMRegressor("svr", cv=10, standardize="zscore_sample")
frem.fit(zmap_filenames, behavioral_target)

# Visualize FREM weights
# ----------------------
from nilearn.plotting import plot_stat_map

plot_stat_map(
    frem.coef_img_["beta"],
    title="FREM",
    display_mode="yz",
    cut_coords=[20, -2],
    threshold=0.2,
)

/home/remi/github/nilearn/env/lib/python3.11/site-packages/nilearn/_utils/param_validation.py:213: UserWarning:

Brain mask is bigger than the volume of a standard human brain. This object is probably not tuned to be used on such data.

/home/remi/github/nilearn/env/lib/python3.11/site-packages/sklearn/svm/_base.py:297: ConvergenceWarning:

Solver terminated early (max_iter=10000). Consider pre-processing your data with StandardScaler or MinMaxScaler.

<nilearn.plotting.displays._slicers.YZSlicer object at 0x7fd1b21e3050>

We can observe that the coefficient map learned by FREM is structured, owing to the spatial regularity imposed by working on clusters and by model ensembling. Although the map has been thresholded for display, it is not sparse (i.e. almost all voxels have non-zero coefficients). See also the other example using FREM and the related section of the user guide.
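The difference between "thresholded for display" and "sparse" can be checked numerically. The sketch below uses a hypothetical helper, `coefficient_summary`, applied to toy weights; how the in-mask weights are extracted from a fitted decoder is noted only as an assumption in the final comment.

```python
import numpy as np


def coefficient_summary(coefs, display_threshold):
    """Fraction of non-zero weights and fraction above a display threshold."""
    coefs = np.asarray(coefs, dtype=float).ravel()
    return {
        "nonzero_fraction": float(np.mean(coefs != 0)),
        "above_threshold_fraction": float(
            np.mean(np.abs(coefs) > display_threshold)
        ),
    }


# Toy weights standing in for the in-mask FREM coefficients.
toy_coefs = np.array([0.5, -0.3, 0.05, -0.01, 0.0, 0.1, 0.25, -0.15])
toy_summary = coefficient_summary(toy_coefs, display_threshold=0.2)
print(toy_summary)

# Assumption: with a fitted decoder, the in-mask weights might be obtained
# with something like frem.masker_.transform(frem.coef_img_["beta"]).
```

A map can have a small `above_threshold_fraction` while its `nonzero_fraction` stays close to 1, which is the situation described above.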

Example use of TV-L1 SpaceNet

SpaceNet is another method available in Nilearn to decode with spatially sparse models. Depending on the penalty used, it yields either very structured maps (TV-L1) or unstructured maps (graph_net). Because of their heavy computational cost, these methods are not run in this example, but you can try them easily if you have a few minutes. Example code is included below.

from nilearn.decoding import SpaceNetRegressor

# We use the regressor object since the task is to predict a continuous
# variable (gain of the gamble).
tv_l1 = SpaceNetRegressor(
    mask=mask_filename,
    penalty="tv-l1",
    eps=1e-1,  # prefer large alphas
    memory="nilearn_cache",
)

# tv_l1.fit(zmap_filenames, behavioral_target)
# plot_stat_map(tv_l1.coef_img_, title="TV-L1", display_mode="yz",
#               cut_coords=[20, -2])
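The comment "prefer large alphas" relates to `eps`, which is understood here to set the ratio between the smallest and the largest regularization strength on the cross-validated path. The sketch below illustrates that reading with a log-spaced grid; `alpha_grid` is a hypothetical helper, `alpha_max=1.0` is an arbitrary value (SpaceNet derives its own from the data), and the exact grid nilearn builds may differ.

```python
import numpy as np


def alpha_grid(alpha_max, eps, n_alphas=10):
    """Log-spaced grid from alpha_max down to eps * alpha_max."""
    return np.logspace(
        np.log10(alpha_max), np.log10(eps * alpha_max), num=n_alphas
    )


narrow = alpha_grid(alpha_max=1.0, eps=1e-1)  # eps used in the example above
wide = alpha_grid(alpha_max=1.0, eps=1e-3)  # a smaller eps, for comparison
print(narrow.min(), wide.min())
```

Under this assumption, a larger `eps` keeps the whole grid close to `alpha_max`, so only strongly regularized models are tried.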

Total running time of the script: (2 minutes 34.390 seconds)

Estimated memory usage: 1716 MB