Note

Go to the end to download the full example code or to run this example in your browser via Binder

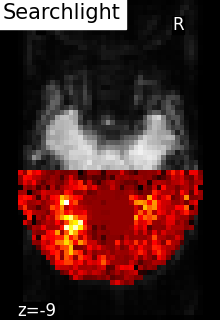

Searchlight analysis of face vs house recognition#

Searchlight analysis requires fitting a classifier a large amount of times. As a result, it is an intrinsically slow method. In order to speed up computing, in this example, Searchlight is run only on one slice on the fMRI (see the generated figures).

Note

If you are using Nilearn with a version older than 0.9.0, then you should either upgrade your version or import maskers from the input_data module instead of the maskers module.

That is, you should manually replace in the following example all occurrences of:

from nilearn.maskers import NiftiMasker

with:

from nilearn.input_data import NiftiMasker

Load Haxby dataset#

import pandas as pd

from nilearn import datasets

from nilearn.image import get_data, load_img, new_img_like

# We fetch 2nd subject from haxby datasets (which is default)

haxby_dataset = datasets.fetch_haxby()

# print basic information on the dataset

print(f"Anatomical nifti image (3D) is located at: {haxby_dataset.mask}")

print(f"Functional nifti image (4D) is located at: {haxby_dataset.func[0]}")

fmri_filename = haxby_dataset.func[0]

labels = pd.read_csv(haxby_dataset.session_target[0], sep=" ")

y = labels["labels"]

session = labels["chunks"]

Anatomical nifti image (3D) is located at: /home/remi/nilearn_data/haxby2001/mask.nii.gz

Functional nifti image (4D) is located at: /home/remi/nilearn_data/haxby2001/subj2/bold.nii.gz

Restrict to faces and houses#

from nilearn.image import index_img

condition_mask = y.isin(["face", "house"])

fmri_img = index_img(fmri_filename, condition_mask)

y, session = y[condition_mask], session[condition_mask]

Prepare masks#

mask_img is the original mask

process_mask_img is a subset of mask_img, it contains the voxels that should be processed (we only keep the slice z = 29 and the back of the brain to speed up computation)

import numpy as np

mask_img = load_img(haxby_dataset.mask)

# .astype() makes a copy.

process_mask = get_data(mask_img).astype(int)

picked_slice = 29

process_mask[..., (picked_slice + 1) :] = 0

process_mask[..., :picked_slice] = 0

process_mask[:, 30:] = 0

process_mask_img = new_img_like(mask_img, process_mask)

/home/remi/github/nilearn/examples/02_decoding/plot_haxby_searchlight.py:61: UserWarning:

Data array used to create a new image contains 64-bit ints. This is likely due to creating the array with numpy and passing `int` as the `dtype`. Many tools such as FSL and SPM cannot deal with int64 in Nifti images, so for compatibility the data has been converted to int32.

Searchlight computation#

# Make processing parallel

# /!\ As each thread will print its progress, n_jobs > 1 could mess up the

# information output.

n_jobs = 1

# Define the cross-validation scheme used for validation.

# Here we use a KFold cross-validation on the session, which corresponds to

# splitting the samples in 4 folds and make 4 runs using each fold as a test

# set once and the others as learning sets

from sklearn.model_selection import KFold

cv = KFold(n_splits=4)

import nilearn.decoding

# The radius is the one of the Searchlight sphere that will scan the volume

searchlight = nilearn.decoding.SearchLight(

mask_img,

process_mask_img=process_mask_img,

radius=5.6,

n_jobs=n_jobs,

verbose=1,

cv=cv,

)

searchlight.fit(fmri_img, y)

/home/remi/github/nilearn/env/lib/python3.11/site-packages/nilearn/image/resampling.py:493: UserWarning:

The provided image has no sform in its header. Please check the provided file. Results may not be as expected.

Job #1, processed 0/739 voxels (0.00%, 394.6805000305176 seconds remaining)

Job #1, processed 10/739 voxels (1.35%, 34.19982921635663 seconds remaining)

Job #1, processed 20/739 voxels (2.71%, 34.32425674565164 seconds remaining)

Job #1, processed 30/739 voxels (4.06%, 35.82048243019969 seconds remaining)

Job #1, processed 40/739 voxels (5.41%, 35.325744743047494 seconds remaining)

Job #1, processed 50/739 voxels (6.77%, 35.15307764006859 seconds remaining)

Job #1, processed 60/739 voxels (8.12%, 34.82167647742286 seconds remaining)

Job #1, processed 70/739 voxels (9.47%, 34.69358360477838 seconds remaining)

Job #1, processed 80/739 voxels (10.83%, 34.383759954079146 seconds remaining)

Job #1, processed 90/739 voxels (12.18%, 33.96018652258248 seconds remaining)

Job #1, processed 100/739 voxels (13.53%, 33.61083063547117 seconds remaining)

Job #1, processed 110/739 voxels (14.88%, 33.20825507051202 seconds remaining)

Job #1, processed 120/739 voxels (16.24%, 32.78989291191102 seconds remaining)

Job #1, processed 130/739 voxels (17.59%, 32.312918256940726 seconds remaining)

Job #1, processed 140/739 voxels (18.94%, 31.876384488128934 seconds remaining)

Job #1, processed 150/739 voxels (20.30%, 31.392492546823814 seconds remaining)

Job #1, processed 160/739 voxels (21.65%, 30.956117873379046 seconds remaining)

Job #1, processed 170/739 voxels (23.00%, 30.475629381511524 seconds remaining)

Job #1, processed 180/739 voxels (24.36%, 29.97595790258574 seconds remaining)

Job #1, processed 190/739 voxels (25.71%, 29.459447584000134 seconds remaining)

Job #1, processed 200/739 voxels (27.06%, 28.96624471836414 seconds remaining)

Job #1, processed 210/739 voxels (28.42%, 28.407312530769918 seconds remaining)

Job #1, processed 220/739 voxels (29.77%, 27.913570028044933 seconds remaining)

Job #1, processed 230/739 voxels (31.12%, 27.373167058802508 seconds remaining)

Job #1, processed 240/739 voxels (32.48%, 26.864236646097872 seconds remaining)

Job #1, processed 250/739 voxels (33.83%, 26.33643079825911 seconds remaining)

Job #1, processed 260/739 voxels (35.18%, 25.85276778114323 seconds remaining)

Job #1, processed 270/739 voxels (36.54%, 25.319397836678917 seconds remaining)

Job #1, processed 280/739 voxels (37.89%, 24.76775706346068 seconds remaining)

Job #1, processed 290/739 voxels (39.24%, 24.238038330146175 seconds remaining)

Job #1, processed 300/739 voxels (40.60%, 23.698341667945748 seconds remaining)

Job #1, processed 310/739 voxels (41.95%, 23.17926028857498 seconds remaining)

Job #1, processed 320/739 voxels (43.30%, 22.637880469579898 seconds remaining)

Job #1, processed 330/739 voxels (44.65%, 22.114281682124467 seconds remaining)

Job #1, processed 340/739 voxels (46.01%, 21.579574473239475 seconds remaining)

Job #1, processed 350/739 voxels (47.36%, 21.1208773335895 seconds remaining)

Job #1, processed 360/739 voxels (48.71%, 20.592186138043044 seconds remaining)

Job #1, processed 370/739 voxels (50.07%, 20.058024949600817 seconds remaining)

Job #1, processed 380/739 voxels (51.42%, 19.52268700338161 seconds remaining)

Job #1, processed 390/739 voxels (52.77%, 18.98019071589461 seconds remaining)

Job #1, processed 400/739 voxels (54.13%, 18.451418633076038 seconds remaining)

Job #1, processed 410/739 voxels (55.48%, 17.902469576909205 seconds remaining)

Job #1, processed 420/739 voxels (56.83%, 17.369069905342318 seconds remaining)

Job #1, processed 430/739 voxels (58.19%, 16.830301906710577 seconds remaining)

Job #1, processed 440/739 voxels (59.54%, 16.28861418529609 seconds remaining)

Job #1, processed 450/739 voxels (60.89%, 15.745893307400955 seconds remaining)

Job #1, processed 460/739 voxels (62.25%, 15.188196071180474 seconds remaining)

Job #1, processed 470/739 voxels (63.60%, 14.643115572959372 seconds remaining)

Job #1, processed 480/739 voxels (64.95%, 14.092821963664841 seconds remaining)

Job #1, processed 490/739 voxels (66.31%, 13.554127044149912 seconds remaining)

Job #1, processed 500/739 voxels (67.66%, 13.007487939865042 seconds remaining)

Job #1, processed 510/739 voxels (69.01%, 12.469213908486667 seconds remaining)

Job #1, processed 520/739 voxels (70.37%, 11.922642923848132 seconds remaining)

Job #1, processed 530/739 voxels (71.72%, 11.382490086409211 seconds remaining)

Job #1, processed 540/739 voxels (73.07%, 10.838600798933557 seconds remaining)

Job #1, processed 550/739 voxels (74.42%, 10.305298511586628 seconds remaining)

Job #1, processed 560/739 voxels (75.78%, 9.77713370858034 seconds remaining)

Job #1, processed 570/739 voxels (77.13%, 9.23812255540371 seconds remaining)

Job #1, processed 580/739 voxels (78.48%, 8.700636695041323 seconds remaining)

Job #1, processed 590/739 voxels (79.84%, 8.158766645228932 seconds remaining)

Job #1, processed 600/739 voxels (81.19%, 7.619109745168939 seconds remaining)

Job #1, processed 610/739 voxels (82.54%, 7.069467388402179 seconds remaining)

Job #1, processed 620/739 voxels (83.90%, 6.5213206527628085 seconds remaining)

Job #1, processed 630/739 voxels (85.25%, 5.974829568191708 seconds remaining)

Job #1, processed 640/739 voxels (86.60%, 5.433205116153041 seconds remaining)

Job #1, processed 650/739 voxels (87.96%, 4.880327299759032 seconds remaining)

Job #1, processed 660/739 voxels (89.31%, 4.333472875234808 seconds remaining)

Job #1, processed 670/739 voxels (90.66%, 3.785630978786892 seconds remaining)

Job #1, processed 680/739 voxels (92.02%, 3.2334104671449273 seconds remaining)

Job #1, processed 690/739 voxels (93.37%, 2.6869610994751 seconds remaining)

Job #1, processed 700/739 voxels (94.72%, 2.1374500901312445 seconds remaining)

Job #1, processed 710/739 voxels (96.08%, 1.585375245663646 seconds remaining)

Job #1, processed 720/739 voxels (97.43%, 1.0387314192558401 seconds remaining)

Job #1, processed 730/739 voxels (98.78%, 0.4921157237947115 seconds remaining)

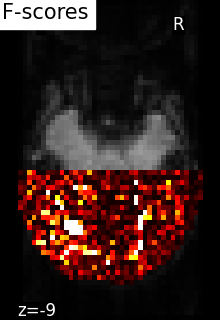

F-scores computation#

from nilearn.maskers import NiftiMasker

# For decoding, standardizing is often very important

nifti_masker = NiftiMasker(

mask_img=mask_img,

runs=session,

standardize="zscore_sample",

memory="nilearn_cache",

memory_level=1,

)

fmri_masked = nifti_masker.fit_transform(fmri_img)

from sklearn.feature_selection import f_classif

_, p_values = f_classif(fmri_masked, y)

p_values = -np.log10(p_values)

p_values[p_values > 10] = 10

p_unmasked = get_data(nifti_masker.inverse_transform(p_values))

Visualization#

Use the fmri mean image as a surrogate of anatomical data

from nilearn import image

mean_fmri = image.mean_img(fmri_img)

from nilearn.plotting import plot_img, plot_stat_map, show

searchlight_img = new_img_like(mean_fmri, searchlight.scores_)

# Because scores are not a zero-center test statistics, we cannot use

# plot_stat_map

plot_img(

searchlight_img,

bg_img=mean_fmri,

title="Searchlight",

display_mode="z",

cut_coords=[-9],

vmin=0.42,

cmap="hot",

threshold=0.2,

black_bg=True,

)

# F_score results

p_ma = np.ma.array(p_unmasked, mask=np.logical_not(process_mask))

f_score_img = new_img_like(mean_fmri, p_ma)

plot_stat_map(

f_score_img,

mean_fmri,

title="F-scores",

display_mode="z",

cut_coords=[-9],

colorbar=False,

)

show()

Total running time of the script: (0 minutes 52.183 seconds)

Estimated memory usage: 916 MB